After-Action Report: Reflect, Revise, Repeat

This story is also published on Medium.

We’ve been walking through our product development process with you these past few months: first we shared our Problem Brief, designed to produce a product hypothesis, and then our Design Brief, which outlines your proposed solution.

The final piece in our tactical trio of product development templates is the After-Action Report (AAR). Since we first broke down the anatomy of a typical AAR here at Heap, we’ve learned a few things that have changed our AAR process for the better.

Here’s what we changed and why we changed it.

To download our new After-Action Report, go here.

After-Action Report: new and improved

What did we ship?

For people who don’t have context on your project, provide a quick reminder of what you added or changed in your product. It’s important to keep reiterating the “why” for any initiative — what problem are we aiming to solve? Why is that problem important? Whom are we solving that problem for?

Relevant documents

At this stage of a project, you should have a number of other relevant documents, most importantly the Problem Brief and the Design Brief, which provide more detailed justifications for the project, as well as a set of user stories.

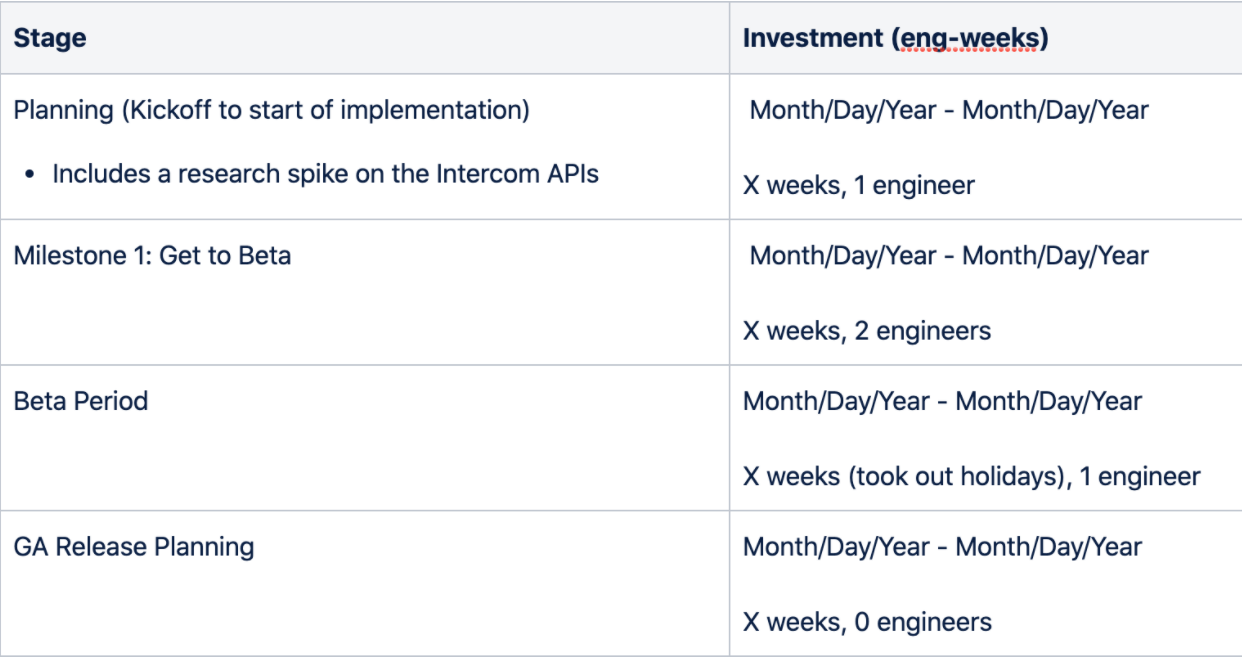

Engineering investment

We’ve updated this section since the last time we blogged about the After-Action Report. Previously, we’d just list our milestones and share “wall clock time,” or how long a project took in weeks. Since then we’ve realized that capturing person-weeks (time spent multiplied by staffing) is more useful for evaluating the project’s return on investment.
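To make the difference between wall clock time and person-weeks concrete, here is a minimal Python sketch. The milestone names, durations, and staffing numbers are hypothetical, and the `Milestone` class is an illustration, not part of our template. It assumes milestones run one after another, so wall clock time is the sum of their durations.

```python
from dataclasses import dataclass


@dataclass
class Milestone:
    name: str
    calendar_weeks: float  # wall clock duration of the milestone
    engineers: float       # average number of engineers staffed on it

    @property
    def person_weeks(self) -> float:
        # Effort = time spent multiplied by staffing.
        return self.calendar_weeks * self.engineers


def summarize(milestones):
    """Return (wall clock weeks, person-weeks), assuming sequential milestones."""
    wall_clock = sum(m.calendar_weeks for m in milestones)
    effort = sum(m.person_weeks for m in milestones)
    return wall_clock, effort


# Hypothetical numbers, for illustration only.
milestones = [
    Milestone("Planning", calendar_weeks=1, engineers=1),
    Milestone("API client", calendar_weeks=2, engineers=2),
    Milestone("Event mapping", calendar_weeks=3, engineers=1.5),
]

wall_clock, effort = summarize(milestones)
largest = max(milestones, key=lambda m: m.person_weeks)

print(f"Wall clock time: {wall_clock} weeks")
print(f"Engineering investment: {effort} person-weeks")
print(f"Largest milestone: {largest.name} ({largest.person_weeks} person-weeks)")
```

Sorting milestones by person-weeks rather than by calendar time is one simple way to spot the investments worth questioning in a review.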

Here are some things we look for when reviewing this section of an after-action report:

Were there milestones that took an especially long time?

Could we have shipped the project without milestones that were a particularly large investment (getting a similar return for much less investment)?

Could we have tested more of our solution sooner with customers if we had ordered our milestones differently?

I encourage anyone who uses this template to dig into these questions in the “What would we do differently?” section. The goal here is to build pattern recognition as a team. That way, when you see similar projects in the future, you will have the collective instinct to pause and say “maybe we should try a different sequence of milestones here” or “maybe we can try a more lean first iteration.”

What were our hypotheses?

This section has also evolved significantly. Our templates now share the same language for hypotheses, so we literally copy and paste hypotheses from one document to the other. We also make sure to call out deviations from key metrics that may have happened over the course of the project (e.g. a baseline that we later realized was incorrect).

In our updated template, we standardize our sentence structure for hypotheses.

[user / account segment] experiences [negative outcome] because [reason], so we expect that [specific product change] will cause [measurable, specific behavior change]

Let’s dive into each part of this sentence:

[user / account segment] makes sure that we think concretely about who we expect to utilize this feature. Not all features are for all users, and it’s easy to create weak hypotheses based on an overly diluted or constrained definition of the target audience for a new feature or update to an existing feature.

[negative outcome] because [reason] reiterates the problem we are solving and ideally points to a measurable negative behavior (e.g. running into an error state or not taking advantage of a feature).

[specific product change] puts the solution we came up with in the context of the behavior change we aim to drive (e.g. streamlining a workflow to increase completion rate).

[measurable, specific behavior change] is the actual success metric, but instead of just listing it, we have put it in the context of a set of users and the specific change in behavior that we aim to drive with a specific change to our product.
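If you keep hypotheses in a script or internal tool rather than a document, the sentence structure above can be sketched as a small Python data structure. This is only an illustration: the `Hypothesis` class, its field names, and the example values are all hypothetical, not taken from our actual briefs.

```python
from dataclasses import dataclass, fields


@dataclass(frozen=True)
class Hypothesis:
    segment: str           # [user / account segment]
    negative_outcome: str  # [negative outcome]
    reason: str            # [reason]
    product_change: str    # [specific product change]
    behavior_change: str   # [measurable, specific behavior change]

    def __post_init__(self):
        # Every part of the sentence is required; reject blank fields.
        for f in fields(self):
            if not getattr(self, f.name).strip():
                raise ValueError(f"{f.name} must not be empty")

    def render(self) -> str:
        """Fill in the standardized hypothesis sentence."""
        return (
            f"{self.segment} experiences {self.negative_outcome} "
            f"because {self.reason}, so we expect that {self.product_change} "
            f"will cause {self.behavior_change}"
        )


# Hypothetical example values, for illustration only.
example = Hypothesis(
    segment="A trial-account admin",
    negative_outcome="a stalled setup",
    reason="installation requires engineering help",
    product_change="a guided install checklist",
    behavior_change="a higher setup completion rate in the first week",
)
print(example.render())
```

Because the same object can be rendered in both the Problem Brief and the After-Action Report, the wording of a hypothesis cannot drift between documents.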

The goal of this format is to avoid the problem of “success metrics in a vacuum,” and instead create a culture of iterating on the product to drive specific changes in behavior, being thoughtful about what those desired changes are, and then reflecting on how successful we were in driving those changes by changing the product.

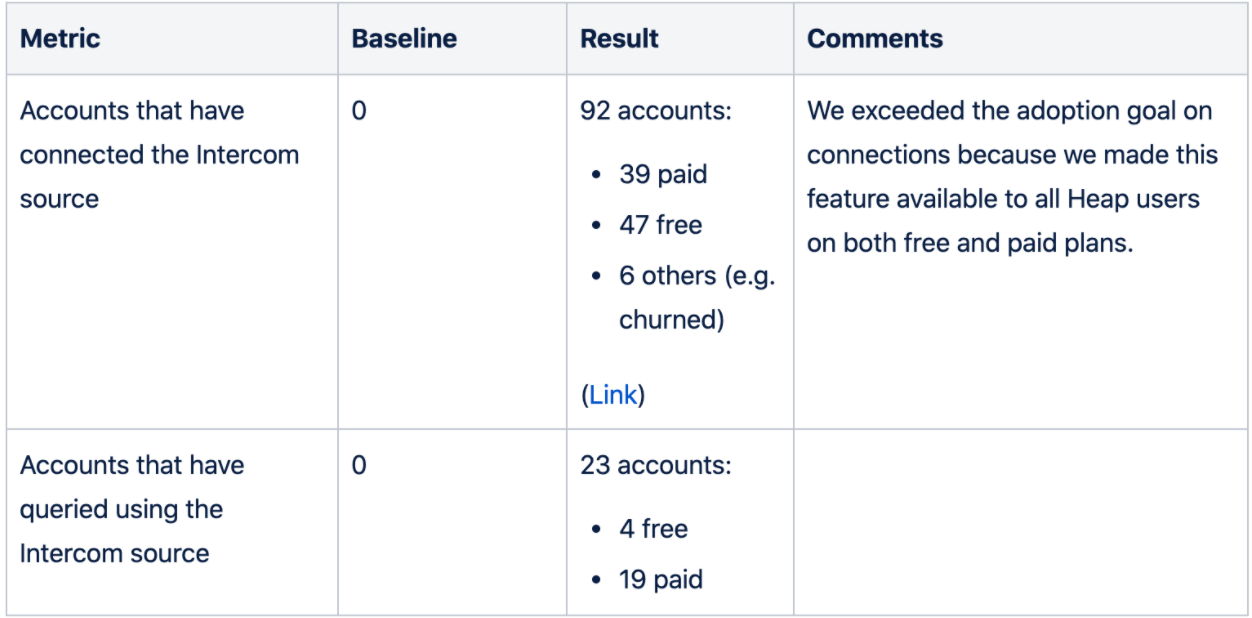

What was the result?

For all leading metrics specified in the hypothesis, describe the results. When possible, link to the relevant Heap report or dashboard with each result. If a metric either didn’t move or moved in an unexpected direction, provide a hypothesis of why this may have been the case (with any supporting reasoning).

This section is the meat of the After-Action Report. In it we make sure to provide three types of information:

Shift in KPIs: Whenever we start a project, we write a Problem Brief that describes the problem we are trying to solve, why we are solving it, and what user stories we expect to enable in our product. We also determine the KPIs we will use to measure success, and establish the baselines for those KPIs. In this section, you simply compare your pre-launch baseline to your new measurements, and call out the major increases, decreases, or “neutral” or unclear impacts.

Anecdotes and customer quotes: Did we get any specific praise or negative feedback from customers? Were there any notable success stories? This is helpful for context, but more importantly it humanizes our work — there are real people using the things we make, and it’s important not to forget that.

Deep dive into changes: It is unlikely that you will know the full impact of a product change before you actually ship it, so it is critical that you are able to formulate and answer new, unplanned questions on the fly. I make extensive use of Heap for this because behavioral insights sit on top of an auto-captured, retroactive dataset. This means that I can “define” a new behavioral event and automatically access the historical click data associated with that event, instead of waiting weeks to get an answer.
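The “Shift in KPIs” comparison described above can be sketched in a few lines of Python. The KPI names and numbers are hypothetical, and the 2% `neutral_band` is an assumed threshold for illustration, not a statistical significance test.

```python
def classify_shift(baseline, current, neutral_band=0.02):
    """Compare a post-launch measurement to its pre-launch baseline.

    Returns (relative_change, label), where label is "increase",
    "decrease", or "neutral". neutral_band is an assumed tolerance:
    relative changes within +/- neutral_band count as neutral.
    """
    if baseline == 0:
        raise ValueError("baseline must be non-zero to compute a relative change")
    relative_change = (current - baseline) / baseline
    if abs(relative_change) <= neutral_band:
        label = "neutral"
    elif relative_change > 0:
        label = "increase"
    else:
        label = "decrease"
    return relative_change, label


# Hypothetical KPIs as (baseline, post-launch) pairs, for illustration only.
kpis = {
    "setup completion rate": (0.40, 0.46),
    "weekly active rate": (0.30, 0.303),
    "support tickets per week": (120, 90),
}

for name, (baseline, current) in kpis.items():
    change, label = classify_shift(baseline, current)
    print(f"{name}: {baseline} -> {current} ({change:+.1%}, {label})")
```

Whether an increase is good news depends on the metric, so the label describes only the direction of the change, not whether the hypothesis held.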

There are a few questions we like to answer when diving into the impact of a launch:

How discoverable was this feature in the product? You can answer this by looking at the number of users who see any of the entry points to your feature.

Did users actually discover your feature? You can answer this by using a funnel with an event that denotes viewing any entry point to your feature and an event that denotes starting to use the feature.

Did users make it through the feature successfully? You can answer this by making a funnel that includes an event that denotes starting to use the feature and an event that denotes successfully completing a workflow with the feature. The more steps you have in between, the higher resolution you get on where users might be running into roadblocks.

What impact did the feature have on product retention? For a SaaS product, there are usually one or more value-driving actions (strong signals that users are getting value out of your product). An easy way to understand if your launch helped improve retention is to graph retention of these value-driving actions, broken down by whether or not the user successfully completed a workflow with your feature. An improvement results in a retention curve that is shifted upward.
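The funnel and retention questions above can be sketched outside of any analytics tool with plain Python. This is not how Heap computes these reports. The event names, user IDs, and data are hypothetical, and the funnel is simplified: it checks that a user performed every step so far, without checking the order or timing of events.

```python
def funnel_counts(steps, events_by_user):
    """Count users remaining after each step of an ordered funnel."""
    remaining = set(events_by_user)
    counts = []
    for step in steps:
        remaining = {u for u in remaining if step in events_by_user[u]}
        counts.append((step, len(remaining)))
    return counts


def step_conversion(counts):
    """Conversion rate from each step to the next one."""
    return [
        (name, current / previous if previous else 0.0)
        for (_, previous), (name, current) in zip(counts, counts[1:])
    ]


def retention_curve(users, active_weeks_by_user, n_weeks):
    """Fraction of `users` who did a value-driving action in each week."""
    users = list(users)
    if not users:
        return [0.0] * n_weeks
    return [
        sum(1 for u in users if week in active_weeks_by_user.get(u, set())) / len(users)
        for week in range(n_weeks)
    ]


# Hypothetical data, for illustration only.
events_by_user = {
    "u1": {"view_entry_point", "start_feature", "complete_workflow"},
    "u2": {"view_entry_point", "start_feature"},
    "u3": {"view_entry_point"},
    "u4": {"view_entry_point", "start_feature", "complete_workflow"},
    "u5": set(),
}
steps = ["view_entry_point", "start_feature", "complete_workflow"]
counts = funnel_counts(steps, events_by_user)
rates = step_conversion(counts)

# Weeks (0 = launch week) in which each user did a value-driving action.
active_weeks_by_user = {
    "u1": {0, 1, 2}, "u2": {0, 1}, "u3": {0}, "u4": {0, 1, 2}, "u5": {0},
}
completers = {u for u, ev in events_by_user.items() if "complete_workflow" in ev}
others = set(events_by_user) - completers
completer_curve = retention_curve(completers, active_weeks_by_user, n_weeks=3)
other_curve = retention_curve(others, active_weeks_by_user, n_weeks=3)

print(counts)
print(rates)
print(completer_curve, other_curve)
```

The first funnel count answers the discoverability question, the step conversion rates show where users drop off, and comparing the two retention curves shows whether users who completed the workflow come back more often.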

Next steps

Based on the findings in this report, describe any specific next steps (follow-up projects, etc.) and link to any documentation on these projects.

It’s important to recognize that projects are never “finished” — there is always some opportunity to polish or improve anything you ship, but the key question is whether or not the return on investment in that improvement is high enough vs. alternative projects.

Here are some questions to ask as you fill in this section:

Were there consistent objections, gaps, or feature requests that came up in customer anecdotes that we can address in a future iteration?

If we had discoverability issues, are there improvements we could make so that the right users are more likely to organically find this feature?

If users struggled to complete the steps of using the feature, what are our hypotheses around reducing that friction?

What would we do differently?

We’ve blended two sections of our previous after-action report template, “Conclusions” and “How can we improve?,” into a single “What would we do differently?” section.

The goal of this section is to provide transparency for your team and company about your learnings from this project, now that you know what your hypotheses were going in, what impact you ended up delivering, and what the cost of that impact was in terms of resources that could have been applied toward other projects.

Some questions to consider as you write this section:

Could you have framed this problem differently? (Look to your qualitative customer feedback and impact on metrics to help answer this question.)

Could you have delivered a solution more efficiently? (Look at your impact on metrics and summary of investment to answer this question.)

Was this the right solution? (If you believe that you had the right framing and hypothesis but you didn’t drive the desired impact, this might be the case, and that’s ok to admit!)

This is probably one of the hardest sections for PMs to write. It requires vulnerability in front of stakeholders (and potentially your whole company). But I think you’ll find (as we have) that this kind of exercise actually builds trust with your team because you are shepherding collective learning about product-market fit, which is a huge part of what it means to be a great PM.

Put into practice: an example

We recently shipped a source integration with Intercom. Here’s how we filled out our After-Action Report template.

What did we ship?

We launched an Intercom integration that brings Intercom chat conversation events into Heap. PMs can use it to analyze the effectiveness of Intercom messages on conversion rate and retention. In particular, the source adds 4 events:

Start Inbound Conversation

Reply to Conversation

Conversation was Closed

Rate Conversation

We intentionally did not include support for other Intercom features like Help Center or Product Tours. The scope was determined during customer discovery where we found that chat is the most frequently used Intercom feature.

Relevant documents

Problem Brief

Engineering Spec

Project Retrospective

External Docs

Engineering investment

To help us understand the ROI of this project, break down the effort (time spent × staffing) in planning and then each milestone of development from kickoff to release.

[Image: Engineering investment, After-Action Report example]

Total: Number of engineering weeks

What were our hypotheses?

[Image: Results, After-Action Report example]

Anecdotes / customer quotes / solutions sentiment:

“Using Heap + Intercom, we saw that visitors on our ‘Competitor Alternative’ pages were far more likely to start a chat than anywhere else on the site. As a result, we changed some copy on the page and added a proactive Intercom popup to promote conversation. We’ve seen a ton of engagement.”

Customers who do a lot of optimization around Intercom chat placement like this feature. They use this integration because they want to answer questions like “how did chat impact conversions or other site behaviors?”, which are hard to answer with other solutions today.

Users really want this integration to include Help Center events, so they can see who is reading docs and then completing some behavior on their site. This wasn’t in scope for this iteration, but there is high demand for this capability. Unfortunately, Intercom’s API doesn’t include Help Center access, so we will need Intercom’s team to add support before we can proceed. They are aware of this request.

What are the next steps?

There is a lot of interest in this source, as seen from the high connection numbers, but the activation rate is low (~50% of paid accounts connect but don’t query using the Intercom events). We need to address the known friction in the integration setup process.

What would we do differently?

We would evaluate the end-to-end user experience early and identify friction points that could hurt adoption. This would likely result in expanding scope to include some setup features that improve adoption by guiding users better.

We also need to do better technical enablement on these integration features by conducting more technical hands-on training with the Solutions team.

Download the template for your next retrospective

Ready to try your hand at the After-Action Report? Download our template and let us know how it goes via feedback@heap.io.

We’d love to hear what works and what doesn’t. We look forward to the insights you uncover and the future iterations of this process.