Data-Informed

PMs: Clarify the Problem, Not the Solution. Here's How.

PMs, let’s take a quick survey. If I asked what the typical division of labor between Product Managers and engineers should be, how would you answer?

My guess is that many would say something like “Product Managers list requirements and engineers build them.” As common as that notion is, I’m here to say: it’s WRONG. Why? Because a key (arguably the key) role of a PM is to frame problems to solve and lead cross-functional teams to come up with impactful solutions — not to define a solution up-front.

You are not the CEO of a feature

The traditional tool for a PM to align teams around a customer problem and a solution has been the “Product Requirements Document,” or PRD. I’ve written many of these, and I’m here to warn you there’s a subtle trap that many a PM regularly falls into. That trap is to conflate the question “what problem are we solving?” with the question “what is our solution?” The problem with this approach is that it restricts the space of solutions to only the ideas in the PM’s head, instead of opening up that space to the broader set of ideas that come from a cross-functional team of engineers, designers, and customer-facing teams. This is not to say that you can’t participate in creating a solution, but that the team will always have a better set of ideas to choose from than just one person.

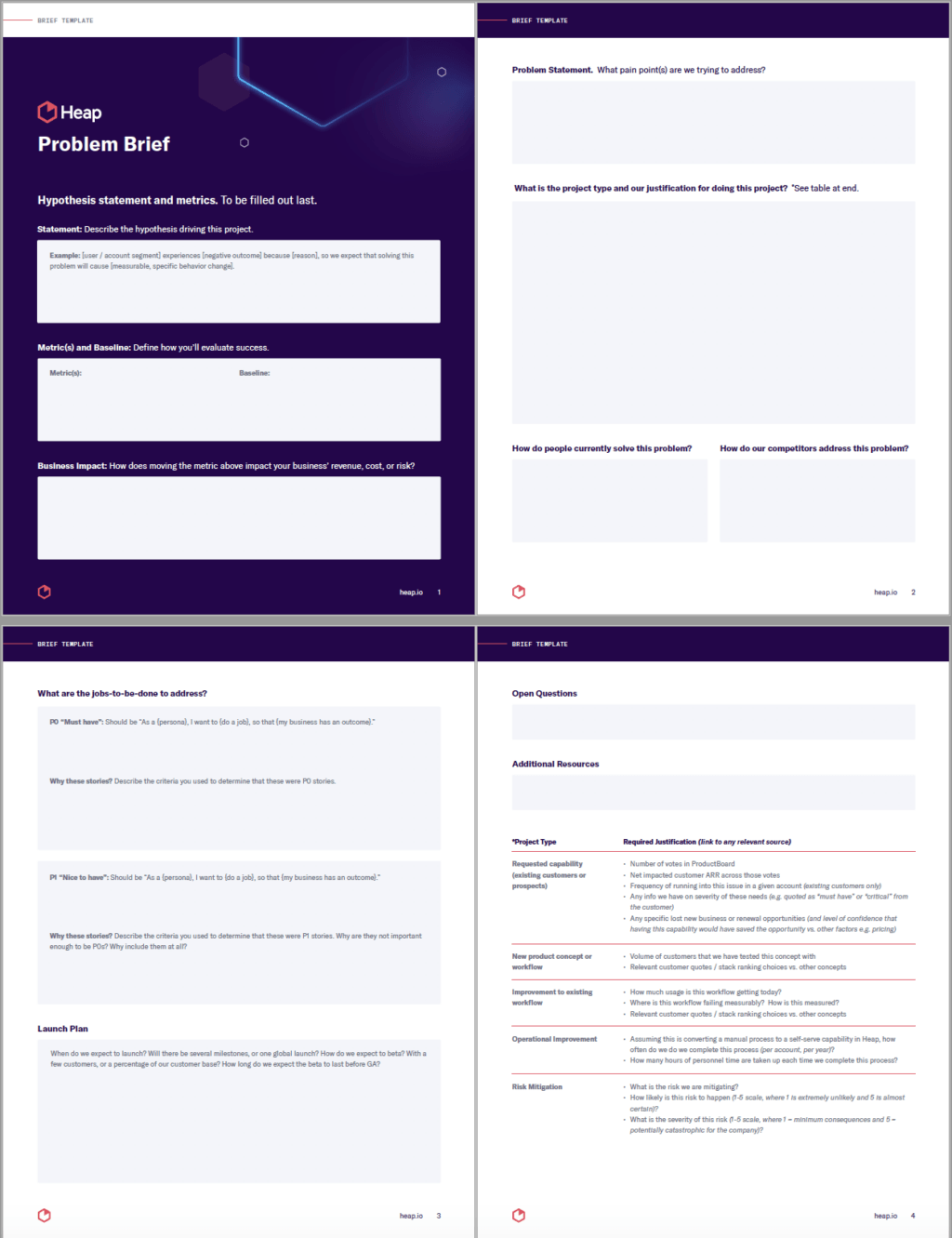

At Heap, we have a ritual at the start of every project called a Kickoff meeting. In this meeting, we have the engineers who will likely be working on the project, the PM, the designer working on the project, and at least 1-2 team members from our customer success teams. The goal of this meeting is to align on the content of what we call a “Problem Brief”: a document that lays out the problem to be solved and argues for why we should choose to solve this particular problem.


At Heap, our Problem Briefs are designed to produce a Product Hypothesis. This is a statement that spells out the problem we’re trying to solve and hypothesizes what the impact of solving it will be. Ideally it has the following structure:

[user / account segment] experiences [negative outcome] because [reason], so we expect that [specific product change] will cause [measurable, specific behavior change]

The key is that we do all of this work – aligning a cross-functional team, creating shared customer context on a problem, making a measurable hypothesis – without prescribing a solution. Solution ideation comes later.

We’ve spent lots of time working through and thinking about this process, and we’ve come to believe it’s a better way to do things. Here’s how we got there, and how we’ve designed our Problem Briefs to help.

How to frame a problem

At Heap, framing a problem means codifying a set of customer context around that problem, including what matters to the customer, why this problem relates to what matters to them, which segments of customers have the problem, how customers work around the problem, how our competitors solve the problem, and how we will know that we’ve successfully solved the problem. (Quick note: Providing evidence that the problem exists and is painful goes a long way in driving alignment. At Heap, we do that through a mix of using quantitative data and showing session replays.)

As a Product team, our job is not only to do this over and over again, but also to make prioritization decisions — that is, compare the investment in solving one problem against the investment in solving another and stack rank these investments. This means that it’s important to be able to compare multiple investments and identify what we believe will have the greatest impact.

Problem Briefs are Heap’s solution to this problem — they templatize the process of framing customer problems, nudging PMs to make apples-to-apples comparisons between investments, emphasize impact, and think of their ideas as hypotheses.

Justifying our investment

When we first created Problem Briefs, we had a big open section that said “Does this solve a high-level business problem for us?” This led to our PMs (myself included!) writing prose of varying length, throwing together a mix of individual customer anecdotes, internal sales team quotes, and more. This hodgepodge made comparing the “why” across investments a nightmare for PMs and our leadership team.

Here’s an example answer to that question written by yours truly in 2018:

Data trust is critical for Heap to gain adoption in the Enterprise market, as the problem of ensuring a trustworthy dataset is increasingly important for larger teams.

Even though I wrote it, I’ll be the first one to tell you that this description tells us nothing. It doesn’t get specific about the number of customers who have indicated a challenge around managing a messy account. Nor does it quantify the hours that our support and professional services team spend on “data audits” to manually clean up the accounts of high-value customers.

Open-ended questions (and the ability to write whatever you want as a justification) prevented us from making objective, apples-to-apples comparisons between opportunities. In other words, they made it harder for us to prioritize!

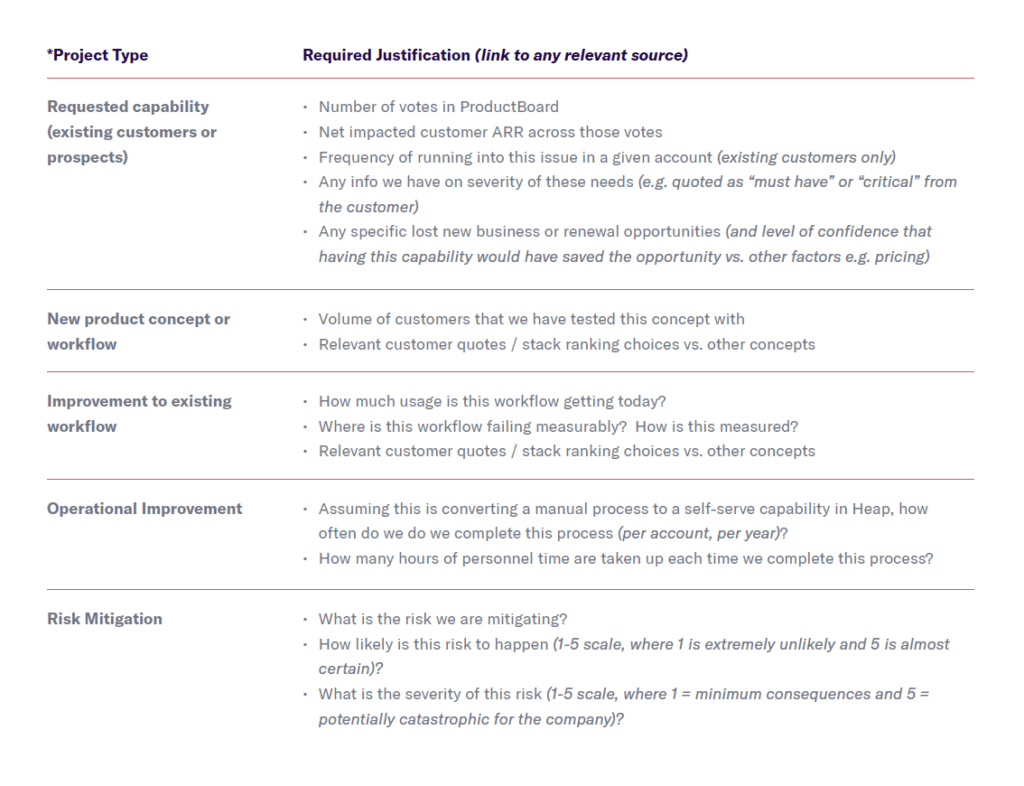

Looking back at a year of past launches, we realized that there were really only 5 types of justifications for product changes we would make:

Requested capability (justified by a number of customer requests and impacted revenue summed across those customers)

Update to an existing workflow (justified by measurably bad performance in an existing workflow)

New workflow (justified by early stage concept tests, even with slideware, that indicate value for customers)

Operational improvement (justified by hours of personnel time saved)

Risk mitigation (justified by the likelihood and severity of the risk)

These justifications all relate to driving business impact: increasing revenue (via improved customer happiness or competitive advantage), decreasing cost (via operational improvements), or decreasing risk (reducing the chances of adverse events). Each of these justifications can also be presented in a consistent manner across projects — this is the key to being able to get apples-to-apples comparisons and streamlined prioritization. For example, with requested capabilities, we should always strive to answer “who asked for it? What is the affected revenue?” Instead of waiting for leadership to ask these questions, we use this template to encourage PMs to anticipate and answer these questions up-front.

Doing the research

It is great to be creative and add new workflows in your product that customers didn’t directly ask for. However, in our experience too many PMs do too much new feature work and not enough homework on customers. (This is one of the main reasons products end up being crammed with unused features.) Yes, we can all cite the line attributed to Henry Ford: “If I had asked people what they wanted, they would have said faster horses.” Great, but in my experience this ends up being the single most referenced crutch for intellectual laziness in all of product management.

When bringing new concepts to the table, DO. YOUR. HOMEWORK. Have a hunch that customers are frustrated by features in your product? Verify that hunch! Identify a segment of users who used those features and send out a targeted survey to better understand their challenges. You can still practice creative, blue-sky thinking while also being rigorous and scrappy with customer research.

Planning for internal impact

Operational improvement projects are usually a matter of saving internal time spent on various tasks. An area that is top of mind for Heap right now is granular time tracking for our support team — we’ll let you know when we figure it out, and if there’s a great SaaS tool that solves this problem, please reach out! We’d love to partner with you!

When mitigating a risk, you are usually aiming to decrease the likelihood of some adverse event or reduce its severity when it does happen. A handy way to prioritize across risks is to score each one on a 1-5 scale for both likelihood and severity. This can be especially helpful for security investments, which don’t present a major problem until they ARE a major problem.
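As a rough sketch of how the 1-5 scoring could be turned into a ranking, the snippet below multiplies likelihood by severity. The multiplication is an assumption for illustration (the scoring scale comes from the text, the way of combining the two ratings does not), and the risk names are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Risk:
    name: str
    likelihood: int  # 1 (rare) to 5 (almost certain)
    severity: int    # 1 (minor) to 5 (critical)

    def __post_init__(self) -> None:
        # Reject ratings outside the 1-5 scale.
        for field_name in ("likelihood", "severity"):
            value = getattr(self, field_name)
            if not 1 <= value <= 5:
                raise ValueError(f"{field_name} must be between 1 and 5, got {value}")

    @property
    def score(self) -> int:
        # Assumption: combine the two ratings by multiplying them.
        return self.likelihood * self.severity


def rank_risks(risks: list[Risk]) -> list[Risk]:
    """Return risks ordered from highest to lowest combined score."""
    return sorted(risks, key=lambda risk: risk.score, reverse=True)


# Hypothetical risks, for illustration only.
risks = [
    Risk("Unencrypted internal logs", likelihood=3, severity=2),
    Risk("Single admin account without 2FA", likelihood=2, severity=5),
    Risk("Stale dependency with a known vulnerability", likelihood=4, severity=3),
]
ranked = rank_risks(risks)

for risk in ranked:
    print(f"{risk.score:>2}  {risk.name}")
```

A product of the two ratings is only one option. A team that cares more about rare, catastrophic events might weight severity more heavily.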

Why look at customers’ current workarounds?

Asking about workarounds is one of the most valuable forms of customer research when it comes to revealing true need. When in doubt, the relative pain of a customer’s workaround can be a great ranking signal for projects.

Here’s a tale of two feature requests. A customer says that they want an integration with another tool. You spend months implementing that integration and close the loop with the customer. But then … you don’t see the adoption you were expecting. When you ask the customer, they say it was simply “not a priority at the moment.”

What went wrong? You did a good job of pinpointing a customer problem. But you didn’t do the work to figure out how much of a problem it was.

Now consider the same request, but when you ask about current workarounds, the customer says “I download data from your tool in a CSV file, then upload it into another tool manually. It takes me 30 minutes and I have to do this every week.” Now you know that this problem causes significant, quantifiable, front-of-mind pain. Your integration will save the customer tangible hours every month, and they’ll be MUCH more likely to adopt your integration.
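To make the workaround pain comparable across requests, it can help to convert each answer into hours per month. The helper below is a minimal sketch of that conversion. The function name and the 52-weeks-per-year approximation are assumptions, not part of any Heap tooling.

```python
WEEKS_PER_YEAR = 52
MONTHS_PER_YEAR = 12


def workaround_hours_per_month(minutes_per_occurrence: float,
                               occurrences_per_week: float) -> float:
    """Convert a recurring manual workaround into hours spent per month."""
    hours_per_year = (minutes_per_occurrence / 60) * occurrences_per_week * WEEKS_PER_YEAR
    return hours_per_year / MONTHS_PER_YEAR


# The CSV export example: 30 minutes, once a week.
csv_export_hours = workaround_hours_per_month(minutes_per_occurrence=30,
                                              occurrences_per_week=1)
print(f"{csv_export_hours:.1f} hours per month")
```

For the CSV example this comes to a little over two hours a month for a single customer, a number you can sum across everyone who reports the same workaround.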

Why look at competitor solutions?

This will vary from industry to industry, but a common scenario especially in the world of enterprise software is that you are competing against other organizations for the same customers. When deciding what the minimum viable product is to solve a customer problem, it is also worth considering how your competitors address the problem. This can save you time thinking of solutions and in some cases (e.g. when competitors unlaunch a capability related to the problem you’re solving) you can even learn from their mistakes.

Ultimately, you want to have a good enough solution that you are confident you are solving the customer’s problem. Importantly, this does not mean that you have to build 100% of what your competitors built. In fact, if all you do is rebuild solutions that already exist, you are always going to be behind your competitors.

Why prioritize user stories?

The “meat” when framing a customer problem is the “user story.” These usually come in the following format:

“As a {persona}, I want to {do a job}, so that {my business has an outcome}.”

A common mistake that I’ve seen product managers run into is constructing a flat list of “requirements” without any signal on the relative ranking of these user stories. When you start engineering work on the project, inevitably the time comes to make a tradeoff: push the launch date to include functionality that addresses a specific user story, or launch without it and add it as a follow-up later?

It’s crucial to know before these decisions come up which capabilities are “must have” vs “nice to have.” I’ve often heard that there needs to be a “healthy tension” between PMs asking for more scope and engineers saying that it can’t be done. Relying on that tension can lead to teams being intellectually lazy and not gathering the customer context that can easily provide objective answers to these questions.

There are many techniques to capture this information. One I use often is surveying customers about their needs before starting a project and asking “how critical is this capability to your use case?” on a 1-7 scale (from ‘not relevant’ to ‘critical’) for each of a candidate set of user stories. Other useful questions include “how often would you use this capability?” and “how much time do you spend working around this limitation?” This allows you to provide glanceable, actionable customer context to inform these decisions and align teams around the “viable” in “minimum viable product”.
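One way to turn those 1-7 survey answers into a ranked list is sketched below. The user stories, the ratings, and the 5.5 cutoff between “must have” and “nice to have” are all hypothetical. Pick a threshold that fits your own response data.

```python
from statistics import mean

# Assumption: an average rating at or above this counts as "must have".
MUST_HAVE_THRESHOLD = 5.5


def rank_user_stories(responses: dict[str, list[int]]) -> list[tuple[str, float, str]]:
    """Rank user stories by mean criticality rating (1 = not relevant, 7 = critical)."""
    rows = []
    for story, ratings in responses.items():
        if not ratings:
            continue  # no survey answers for this story yet
        if any(not 1 <= rating <= 7 for rating in ratings):
            raise ValueError(f"ratings for {story!r} must be between 1 and 7")
        average = float(mean(ratings))
        label = "must have" if average >= MUST_HAVE_THRESHOLD else "nice to have"
        rows.append((story, average, label))
    return sorted(rows, key=lambda row: row[1], reverse=True)


# Hypothetical survey results, one rating per respondent.
responses = {
    "Assign roles during invite": [4, 5, 3, 6, 4],
    "Invite many teammates at once": [7, 6, 7, 5, 6],
    "Resend expired invites": [6, 6, 5, 7, 6],
}
prioritized = rank_user_stories(responses)

for story, average, label in prioritized:
    print(f"{average:.1f}  {label:<12}  {story}")
```

The mean is the simplest summary. With few respondents, reading the raw ratings alongside it guards against one enthusiastic customer skewing the ranking.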

How can you use these documents to become hypothesis driven?

As we said above, the goal of collecting and organizing this information is to make a Product Hypothesis. It’s important to make your hypothesis measurable. That is, if your hypothesis is that “we will solve the customer problem,” how will you know if you succeeded vs failed?

Ideally, when making product changes, you will be creating or changing a specific user behavior. This usually manifests as one of:

Creating a new capability that gets adopted and repeatedly used (repeat usage is important because it’s a proxy for getting value out of a capability)

Improving an existing workflow, as measured by completion rate from the start to finish of that workflow (and any relevant intermediate steps)

Increasing the volume or quantity of some positive action (e.g. purchase or order volume) made in a given period of time or user session

Reduction in some number of adverse events such as support tickets
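For the workflow case in the list above, a completion rate can be computed from the number of users who reach each step. The sketch below assumes you already have those counts. The step names and numbers are made up for illustration.

```python
def funnel_report(step_counts: list[tuple[str, int]]) -> list[dict]:
    """Compute conversion from the previous step and from the start for each step."""
    if not step_counts or step_counts[0][1] <= 0:
        raise ValueError("the first step needs at least one user")
    started = step_counts[0][1]
    previous = started
    report = []
    for step, users in step_counts:
        report.append({
            "step": step,
            "users": users,
            # Share of users who made it here from the step before.
            "from_previous": users / previous if previous else 0.0,
            # Share of users who made it here from the very first step.
            "from_start": users / started,
        })
        previous = users
    return report


# Hypothetical counts of users reaching each step of a workflow.
steps = [
    ("Open invite dialog", 400),
    ("Enter emails", 300),
    ("Send invites", 240),
]
report = funnel_report(steps)
completion_rate = report[-1]["from_start"]

for row in report:
    print(f"{row['step']:<20} {row['users']:>4}  {row['from_previous']:.0%}  {row['from_start']:.0%}")
```

The `from_previous` column points at the intermediate step where most users drop off, which is often a better starting point than the overall rate.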

Usually you can form a hypothesis with the following sentence structure for consistency and readability:

[user / account segment] experiences [negative outcome] because [reason], so we expect that [specific product change] will cause [measurable, specific behavior change]
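If it helps to keep hypotheses consistent across briefs, the sentence structure above can be captured as a small record with one field per bracketed slot. This is only a sketch: the class name is an assumption, and the example values (apart from the bulk invite idea discussed in this post) are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class ProductHypothesis:
    segment: str           # [user / account segment]
    negative_outcome: str  # [negative outcome]
    reason: str            # [reason]
    product_change: str    # [specific product change]
    behavior_change: str   # [measurable, specific behavior change]

    def statement(self) -> str:
        """Render the hypothesis using the sentence structure from the brief."""
        return (
            f"{self.segment} experiences {self.negative_outcome} "
            f"because {self.reason}, so we expect that {self.product_change} "
            f"will cause {self.behavior_change}"
        )


# Hypothetical values, for illustration only.
hypothesis = ProductHypothesis(
    segment="An admin who invites 5 or more users in one session",
    negative_outcome="slow, repetitive team setup",
    reason="invites must be sent one at a time",
    product_change="a bulk invite capability",
    behavior_change="about 50 customers to adopt bulk invite within 1 month of launch",
)
print(hypothesis.statement())
```

Forcing every slot to be filled makes a missing segment or an unmeasurable behavior change obvious before the Kickoff.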

Ideally, at the start of the project you can both quantify those negative outcomes (e.g. support ticket requests, failure rate in completing a workflow, low adoption / retention rate of a feature) and establish a baseline for the behavior that you want to measurably change.

In cases where you are creating a new surface or product feature, you may not be able to explicitly establish a baseline or define a specific action up front. This is fine, but in this case you should return to this document and update it before releasing your feature to make sure that you have a measurable definition of success.

When communicating a hypothesis and a metric, it’s also important to flag when this metric should be interpreted. Is this a leading metric, which will respond quickly as users are exposed to the product change? Or is it a lagging metric that will only truly manifest over months (e.g. seeing a decrease in monthly support tickets of a certain type)?

A major area where I’ve seen product managers struggle is defining what “good” is for a given metric and a given product change. Baselines are helpful here because they remove subjectivity and ground changes to existing product workflows in the reality of those workflows today. Targets for a metric require more nuance, and setting them is an important but often skipped step when defining success for a new product feature. Just like sales teams have leads that then convert into conversations and closed deals, a product feature may start with a pipeline of interested customers.

An example of a related behavior and target is as follows:

Say you are creating a “bulk user invite” feature and you observe that ~100 customers have at least one user in the last month who invited at least 5 users in one session. These users would likely benefit from a bulk invite feature, so let’s assume that if we build this feature, half of them will adopt it. That means our adoption target is ~50 customers for the bulk invite feature within 1 month of launch.
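The arithmetic behind that target is simple enough to sketch. The snippet below assumes a session log that has already been filtered to the last month. The log format, function names, and customer names are hypothetical, while the 5-invite threshold and the 50% adoption assumption come from the example above.

```python
# Hypothetical session log: (customer_id, user_id, invites_sent_in_session).
sessions = [
    ("acme", "u1", 6),
    ("acme", "u2", 1),
    ("globex", "u3", 5),
    ("initech", "u4", 2),
    ("initech", "u4", 3),  # two small sessions do not add up to one bulk session
]


def eligible_customers(sessions: list[tuple[str, str, int]],
                       min_invites: int = 5) -> set[str]:
    """Customers with at least one session where a user sent min_invites or more."""
    return {customer for customer, _user, invites in sessions if invites >= min_invites}


def adoption_target(eligible_count: int, assumed_adoption_rate: float) -> int:
    """Turn a count of likely beneficiaries into an adoption target."""
    if eligible_count < 0:
        raise ValueError("eligible_count cannot be negative")
    if not 0 <= assumed_adoption_rate <= 1:
        raise ValueError("assumed_adoption_rate must be between 0 and 1")
    return round(eligible_count * assumed_adoption_rate)


eligible = eligible_customers(sessions)
print(sorted(eligible))

# The numbers from the example: ~100 eligible customers, half assumed to adopt.
target = adoption_target(eligible_count=100, assumed_adoption_rate=0.5)
print(target)
```

The adoption rate is the weakest input here, so record it next to the target and revisit it after launch.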

You can do similar analysis to create targets for repeat usage as well. When building integrations with other tools, a common technique for establishing baselines is identifying the number of customers who are also customers of the tool you are integrating with, then incorporating assumptions about how much of this “addressable market” you can actually reach. As you become more consistent with how you are forming your hypotheses, you can start to make better assumptions about this adoption rate.
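For integrations, the “addressable market” step is essentially a set intersection followed by a reach assumption. A minimal sketch, with hypothetical customer lists and a made-up 25% reach rate:

```python
def reachable_customers(our_customers: set[str],
                        partner_customers: set[str],
                        assumed_reach_rate: float) -> tuple[set[str], int]:
    """Return the shared customers and how many of them we assume we can reach."""
    if not 0 <= assumed_reach_rate <= 1:
        raise ValueError("assumed_reach_rate must be between 0 and 1")
    overlap = our_customers & partner_customers
    return overlap, round(len(overlap) * assumed_reach_rate)


# Hypothetical customer lists, for illustration only.
ours = {"acme", "globex", "initech", "umbrella", "hooli"}
partner = {"globex", "initech", "umbrella", "hooli", "stark"}

overlap, integration_target = reachable_customers(ours, partner, assumed_reach_rate=0.25)
print(sorted(overlap), integration_target)
```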

As you focus your hypotheses on moving a small set of KPIs over a long period of time, you will start to see cumulative improvements in those KPIs and drive real impact!

Bringing it all together: how to kick off a project

As I stated at the beginning, projects here begin with a Kickoff. The structure of the meeting is simple: read through the Problem Brief and answer questions. The goal is to make sure that customer needs are clearly framed, the full space of possible solutions can be explored, and your cross-functional team is aligned on the “why” behind pursuing this project.

Once we feel aligned, we update the document and the team transitions from the problem space into the solution space, bringing in ideas from everyone involved. (This ideation may happen in the same meeting or spill over into additional brainstorming sessions.)

We hope this is a useful tool for all of you product leaders out there — give it a try and let us know what works and what doesn’t work. Coming soon: a post that explains the design component of this process, and that walks through our Design Brief.