Virtual Events: Making Data-Driven Decisions a Reality

Our mission is to make it possible for anyone to use data to make better decisions. It should be easy for people to be right, instead of making decisions based on gut instinct or the loudest voice in the room. The same mission could describe at least a dozen other analytics companies, some dating back to the 1990s. So why does it feel like there has been so little progress towards making it a reality?

The Glorious Future

We’ve seen this story again and again: an executive is charged with empowering their team with data, so they set out on a data initiative. They buy some tools, hire data scientists, spend hundreds of hours in meetings settling on a tracking plan and working with the engineering team to get it implemented to spec. At long last, the dataset exists and the team can start answering questions.

But the resulting dataset is way too complicated for any PMs or marketers to use directly. The data team needs to spend the majority of their time maintaining tracking, ETL jobs, and a data warehouse. Nobody understands what, precisely, the events represent, and various parts of the dataset are broken at any given time, so nobody trusts the data enough to make important decisions based on it. The data is used for “directional” guidance, at best. Getting an actual analysis done requires that your request have enough organizational clout to become a priority for the data team, and there’s still a good chance a limitation in the tracking will prevent you from getting an answer. As a result, only a small fraction of the questions that merit data attention actually get it.

Data teams spend most of their time maintaining and cleaning the dataset. They’re also the only people who understand the schema well enough to get any use out of the data. This is nobody’s fault – it’s a natural consequence of complex datasets and the fact that nobody else uses the data regularly enough to remember the details or keep up with the changes. But it can mean that the data team is reduced to being in-house schema experts. They alone know the arcana necessary to conduct simple analyses: which fields do we typically join these two tables on? Do we trust this column?

This is not the glorious future of data-driven decisions we were promised.

We should be using our data teams to configure our datasets – how users are modeled, how datasets are joined, and so forth. These configurations can be intricate, so it’s critical that they can be iterated upon. Our tools should use these configurations to produce easily understood and readily useful virtual datasets, which a wide range of people in our organization can use. And our data teams should spend the bulk of their time doing the sophisticated, deep analyses that only they can do instead of primarily keeping data clean and schemas up-to-date.

What Went Wrong?

Time and time again, in conversations with teams across organizations, we see the same problems holding back data teams and keeping analytics stacks from being useful:

People don’t understand how to navigate the datasets they are using

People don’t have the right data to answer their questions

The data isn’t trustworthy

Such a widespread problem raises the question: what’s missing from the current tools? Why don’t they fulfill their potential?

First generation analytics products were built in the late 1990s around measuring page views. They let you track bounce rate, common referrers, and other high-level site usage statistics. The second generation of analytics tools was built in the late 2000s around events. Interactive web apps built with AJAX needed smarter primitives for measuring user engagement. The web 2.0 era merited a corresponding analytics 2.0.

But this new paradigm of event-based analytics brings a host of new problems. What events do you track? How do you know if you’ve tracked them correctly? When you’re doing an analysis, how do you know what Checkout Flow 2 Confirm means? How do you keep your tracking up-to-date as your product evolves?

To move beyond these problems, we’ve introduced two new technologies at Heap. The first is automatic data capture, which produces a complete, raw dataset about your users. The driving force behind automatic data capture is the belief that instead of trying to figure out upfront which user interactions we care about and tracking each one, we should capture all of them. Automatic data capture means we will always have the dataset we need. It also creates the basis for the second technology we’ve implemented.

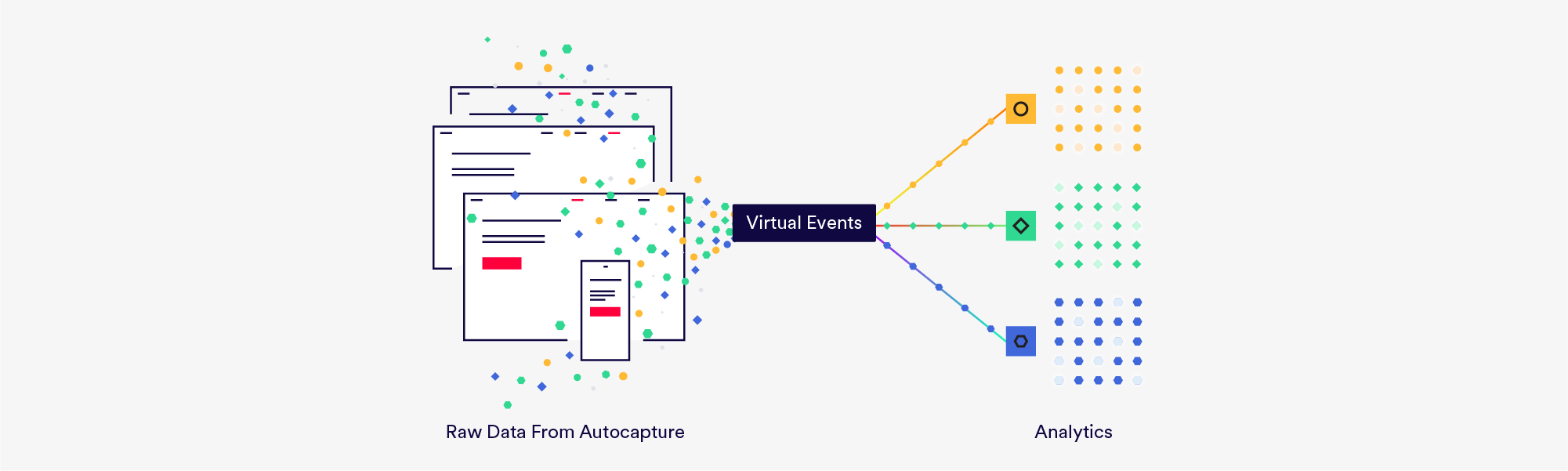

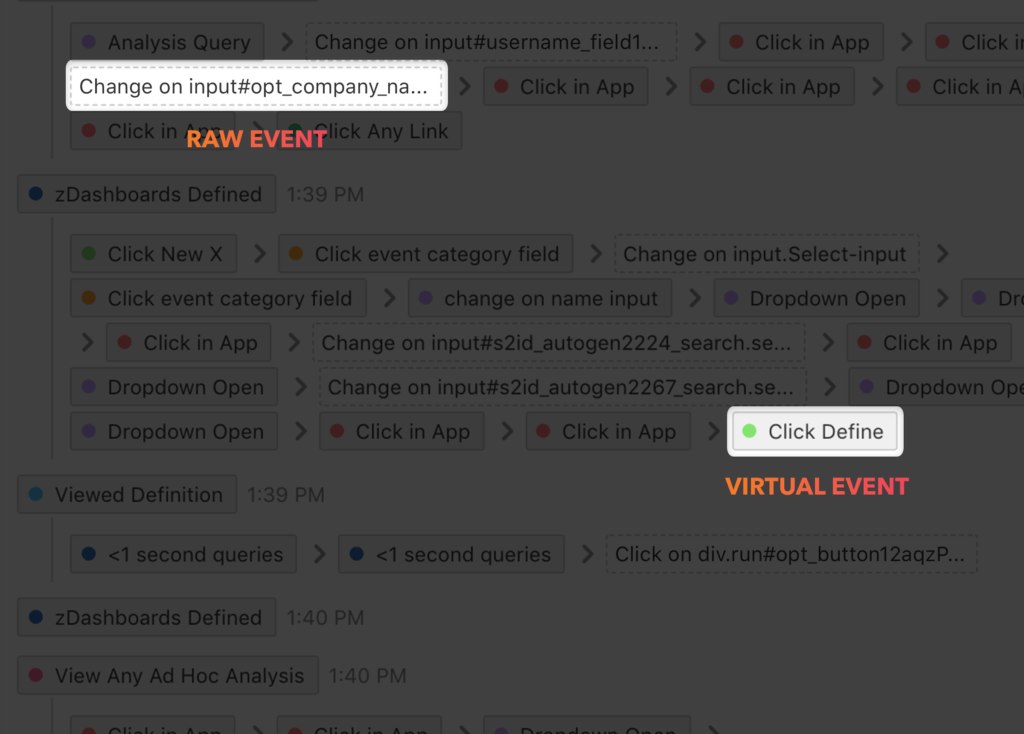

In contrast to second-generation analytics tools, in which you explicitly track the events you care about, Heap is built around Virtual Events. A Virtual Event is like a regular event, except that instead of logging it from your codebase, you define it in your analytics tool. Rather than writing tracking code to the effect of Data.track('checkout'), you define a Checkout to be a click on the relevant button on your site. Since all these clicks exist in your automatically captured dataset, our tool can provide you with a complete dataset of checkouts, as if you had been tracking it in a second-generation analytics tool.

From the perspective of someone running an analysis, Virtual Events work the same way as traditional events. You use these Virtual Events the way you’d use manually tracked events in any other analytics tool. But under the hood, they are views on top of an underlying raw dataset. This is data virtualization, a layer of indirection between your raw data and the dataset that you analyze.
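To make the contrast with Data.track('checkout') concrete, here is a minimal Python sketch of a Virtual Event as a named predicate evaluated on read over automatically captured events. The `RawEvent` shape (with `kind`, `target`, and `timestamp` fields), the `VirtualEvent` class, and the CSS-selector targets are hypothetical illustrations, not Heap's data model or API.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class RawEvent:
    """One automatically captured interaction (hypothetical minimal shape)."""
    user_id: str
    kind: str       # e.g. "click" or "pageview"
    target: str     # e.g. a CSS selector for clicks, a path for pageviews
    timestamp: int


@dataclass(frozen=True)
class VirtualEvent:
    """A named predicate over raw events; nothing is stored per event."""
    name: str
    predicate: Callable[[RawEvent], bool]

    def evaluate(self, raw: list[RawEvent]) -> list[RawEvent]:
        # A view: filter the raw dataset on read, leaving it unchanged.
        return [e for e in raw if self.predicate(e)]


raw_events = [
    RawEvent("u1", "pageview", "/cart", 100),
    RawEvent("u1", "click", "button#checkout", 105),
    RawEvent("u2", "click", "a#help", 110),
    RawEvent("u2", "click", "button#checkout", 120),
]

# "Checkout" is defined in the analytics layer, not logged from product code.
checkout = VirtualEvent(
    "Checkout",
    lambda e: e.kind == "click" and e.target == "button#checkout",
)

print([e.user_id for e in checkout.evaluate(raw_events)])  # ['u1', 'u2']
```

Downstream analyses only see the list of Checkout occurrences, which is why, to the analyst, a Virtual Event behaves like a manually tracked one.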

These two pillars – automatic data capture and data virtualization – are the fundamental technologies that will unlock the promise of user analytics.

Automatic Capture, Virtual Events

The most obvious problem with traditional events is that you have to decide upfront which ones you want to capture and analyze. When you want to add a new event to your dataset, you need to get it logged, which takes weeks if you’re lucky, and then you only have data going forward.

Virtualization solves this problem by letting you update your event schema on demand, retroactively. When you decide you’d like to know how watching your demo video affects conversion through your funnel, for example, you can create a new Virtual Event for Watch Demo Video and run your analysis on retroactive data – automatic capture means we’ve been tracking those interactions under the hood in raw form, and Virtual Events let us analyze those raw interactions.
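The retroactive behavior can be sketched in a few lines of Python, assuming (hypothetically) that a definition is just a set of click targets and that every raw click is kept. The point is that a definition created, or widened, after the data was captured still applies to the full history, and the raw history itself is never rewritten. `define`, `occurrences`, and the selectors are illustrative names only.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RawEvent:
    # Hypothetical shape of an automatically captured interaction.
    user_id: str
    kind: str
    target: str
    timestamp: int


# Raw interactions captured before anyone asked about the demo video.
history = [
    RawEvent("u1", "click", "video#demo-play", 1_000),
    RawEvent("u2", "click", "nav#pricing", 1_200),
    RawEvent("u3", "click", "video#demo-play-mobile", 1_500),
]

# Virtual Event definitions: event name -> click targets that count as it.
definitions: dict[str, set[str]] = {}


def define(name: str, targets: set[str]) -> None:
    """Create or replace a definition; the raw history is never modified."""
    definitions[name] = set(targets)


def occurrences(name: str) -> list[RawEvent]:
    """Evaluate the current definition over the full captured history."""
    targets = definitions[name]
    return [e for e in history if e.kind == "click" and e.target in targets]


# Defined after all of `history` was captured, yet it matches old clicks.
define("Watch Demo Video", {"video#demo-play"})
print([e.user_id for e in occurrences("Watch Demo Video")])  # ['u1']

# The mobile player was missed; widening the definition fixes the past too.
define("Watch Demo Video", {"video#demo-play", "video#demo-play-mobile"})
print([e.user_id for e in occurrences("Watch Demo Video")])  # ['u1', 'u3']
```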

You can think of this as analogous to virtual machines. Physical computers need to be provisioned ahead of time, but you can provision virtual machines on demand. Virtual Events work the same way.

This basic premise means your event schema is defined explicitly, within Heap, instead of implicitly, via tracking code scattered throughout your codebase.

Another common problem that blunts the impact of analytics tools is that nobody can understand the resulting dataset. An event schema that lives in your analytics tool makes it possible to interpret what you’re seeing when you run a report. Instead of asking an engineer what Shopping Flow Begin means, or consulting a tracking plan doc that was last updated 18 months ago, Heap can show you the exact button in your product that relates to that event. A traditional event is code in your product that yields rows in a table. A Virtual Event is a definition, and you can always ask the tool what it means.

When you use traditional events, the event schema is hardcoded into the events themselves. An event logged via Data.track('Signup Click') is fundamentally and immutably a Signup Click. Worse, that hardcoding occurs in your product’s code, out of reach of any analytics tool and almost everyone at your company! If you aren’t sure what Checkout Step 2 means, you’ll need to ask an engineer to grep the codebase. If you aren’t sure whether you’re tracking an event correctly, you’ll need to get an engineer to track some additional events before you can sanity-check what you’re seeing. If your tracking code breaks, you will have a permanent, unfixable hole in your data – the dreaded Adobe Blackout.

Virtual Events are defined in your analytics platform, instead of in your codebase. That means you can audit your metrics, because your analytics platform can tell you what each event is, in concrete terms. This is critical – if you can’t determine what your metrics mean, you can’t even ask if they’re right or wrong, let alone be confident that they’re right. In other words, they’re not even wrong!

Virtual Events and the raw data they represent


Iteration is Power

Virtual Events mean you can iterate on the schema you use to model what your users do. Traditional events come from your codebase, which means your event taxonomy is hardcoded into your product. As a result, your event schema is impractical to extend and impossible to keep up-to-date. Being able to iterate is the key to maintaining a complete, correct dataset.

Finding valuable insights in your data is a fundamentally iterative process: a question exposes something curious, which you follow into another question and another. Fifteen questions later, you discover that a campaign is performing poorly for customers who have previously read a whitepaper, or a key call-to-action is below the fold on mobile browsers.

That means the critical variable that determines the power of an analytics workflow is how quickly you can iterate on your analyses. If your event schema is hardcoded in your product, your iteration speed is bounded by the speed at which you can get new logging code shipped and accumulate a new dataset. A Virtual Event schema that lives in your analytics tool can be extended whenever you have a new idea. You can iterate on your analyses at the speed of configuration, as opposed to the speed of code – in a minute, instead of six weeks.

Iteration speed is power. If you can ask questions at the speed of thought, you can actually uncover these gems. If each step takes six weeks, you won’t. By letting you redefine events and rescope your analytics queries in minutes, Virtual Events bring the full power of iteration to your analytics process.

Virtualizing Users

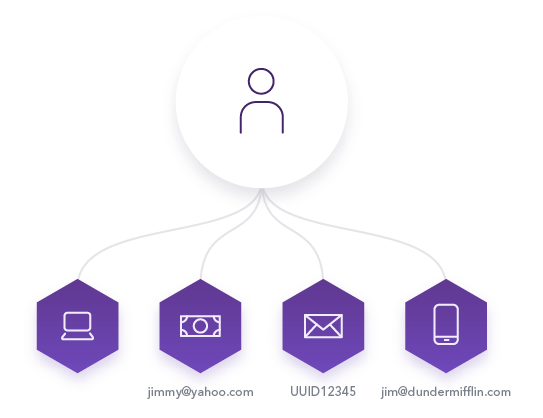

Another key pillar of a useful dataset is a first-class notion of user identity. Doing any analysis on users and their behavior requires knowing what constitutes a user. You can’t compute a conversion funnel between View Homepage and Sign Up if you can’t connect these events to a single person. What are the rules by which your dataset should determine whether multiple sessions belong to the same person?

To make it possible to represent end users in a coherent way, most analytics tools give you an API that lets you tag a user with an identity. Any two users who get the same identity will be merged. This is a powerful primitive for cross-device tracking. But this approach has the same problems that come with hardcoded events: the logic that specifies identities lives in your app. It’s impractical to iterate on and impossible to audit.

A subtler problem is that this model is not powerful enough to represent a lot of real-world user flows. Let’s say you run an e-commerce site in which users can make purchases with a guest email address, or they can log in to make a purchase. Should the “identity” be the guest email or the post-signup username?

Either choice turns out to break the dataset. If you use email addresses, you won’t be able to correlate logged-in activity across different devices. If you use the username, you won’t be able to correlate guest checkouts from the same email across devices. If you use a combination of the two, your users’ identities will change right at the point where they log in or sign up. That means you can’t compute conversion across one of the most important boundaries in your product!

The right answer for constituting a user is that there is no single user identity. The correct behavior is for all activity on a username to show up on one user, along with any guest activity on any email associated with that user. If a user signs up and associates an email, and then later logs out and does a guest purchase with the same email, that guest purchase should appear on the same user. Your analytics platform should abstract away the complexity of these identity relationships and present a single user record for the purposes of your analyses.
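One way to get exactly this behavior is to treat sessions, usernames, and emails as nodes in a graph, record every observed association as an edge, and call each connected component one user. The Python sketch below does that with a union-find (disjoint-set) structure; it illustrates the rule described above and is not a description of how Heap implements identity. `IdentityGraph` and all identifiers are hypothetical.

```python
from collections import defaultdict


class IdentityGraph:
    """Union-find over identifiers; each connected component is one user."""

    def __init__(self) -> None:
        self.parent: dict[str, str] = {}

    def find(self, x: str) -> str:
        """Return the representative identifier for x's user."""
        self.parent.setdefault(x, x)
        while self.parent[x] != x:
            # Path halving: point x at its grandparent as we walk up.
            self.parent[x] = self.parent[self.parent[x]]
            x = self.parent[x]
        return x

    def link(self, a: str, b: str) -> None:
        """Record that identifiers a and b belong to the same person."""
        root_a, root_b = self.find(a), self.find(b)
        if root_a != root_b:
            self.parent[root_b] = root_a


graph = IdentityGraph()
# Associations observed in the raw data (all identifiers are made up).
graph.link("session:s1", "username:alice")           # signs up on a laptop
graph.link("session:s2", "username:alice")           # logs in on a phone
graph.link("username:alice", "email:alice@example.com")  # account email
graph.link("session:s3", "email:alice@example.com")  # later guest checkout
graph.link("session:s4", "email:bob@example.com")    # unrelated guest

events = [
    ("s1", "View Homepage"),   # pre-signup activity in the same session
    ("s1", "Sign Up"),
    ("s2", "View Cart"),
    ("s3", "Guest Purchase"),
    ("s4", "Guest Purchase"),
]

# Group each session's events under its user's representative identifier.
users = defaultdict(list)
for session, action in events:
    users[graph.find(f"session:{session}")].append(action)

print(sorted(len(actions) for actions in users.values()))  # [1, 4]
```

Because no identifier is privileged, the pre-signup View Homepage, the logged-in activity on two devices, and the later guest purchase all land on one user, so conversion across the signup boundary stays computable.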

It turns out this kind of use case is quite common. A financial services lending website has a different notion of identity at the quote stage, the active loan stage, and the client stage. A SaaS product has leads which become opportunities which become customers, or which become lost deals which may become opportunities again in six months. Heap is the only analytics tool that can model users whose identity is defined differently at different points in time.

An abstracted concept of user identity is just step one; this needs to be virtualized too. Data teams need to be able to change these identity configurations in response to new questions and shifting landscapes. These updates should likewise be retroactive and non-destructive. What if we need to add a self-serve flow to our sales pipeline, in which users won’t become opportunities at any point? Maybe we accidentally combined a big swathe of users – can this be undone? What if I would like to analyze accounts, not users – can I do so flexibly and retroactively? Or do I need to hardcode an account key in my tracking code?

Present-day Heap requires you to write code that uses our APIs if you want to make these kinds of changes to how you model your users, but it’s only a matter of time until you can iterate on your user model retroactively as well.

Virtual Users


Combining Datasets

Your website or app probably uses dozens of third-party tools that have salient data about your users. Their payments live in a payment processing tool. The A/B test variants they’ve seen live in Optimizely. The support tickets they’ve filed live in Zendesk, and so forth. To get a complete picture of user behavior, you need to bring all this data together.

With our Sources product, you can ingest event data from 18 tools and counting. This enables an enormous variety of new analyses – How did my homepage A/B test variants affect repeat purchase behavior? How much revenue can be attributed to this email campaign? – and so forth.

Other tools have treated the problem of scattered datasets as one of staying on top of disparate APIs and papering over their differences – i.e., as a question of software engineering and ETL. This is an important first step when incorporating third-party data into an analytics product. But it misses the all-important step of connecting third-party events to users in the dataset. Without that, you have a glorified bag of unrelated tables with no clear mechanism for using them in concert. This is not a useful dataset.

This problem of mapping events to users is hard. It’s a question of data integration and data modeling, for which the ETL portion is a precondition. It is understandable that other tools have sidestepped the data integration part of this problem. But in doing so, they have rendered the third party data useless unless you are an expert in your underlying data schema and are comfortable writing six-table joins.

In Heap, you specify a rule for how to join your third-party datasets with your first-party data. This makes for a powerful, useful mapping. When doing analyses, the tool presents virtualized user records in which third-party events are integrated, and everything is auditable within Heap. You can use this data without needing to know the underlying APIs or data mechanics.

But in the long term, this data integration configuration should be iterative and flexible too. Let’s say we tag users with an email property and use it to associate Stripe payments with users. If we start additionally tagging users with stripe_ids, we should be able to express rules like: if a Stripe payment comes in, look for a user with the corresponding stripe_id, and if there isn’t one look for a user with a corresponding payment email. Or, if more than one user has a matching stripe_id, route events to the one who most recently performed a Checkout event.
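The rule in the previous paragraph can be written as a small routing function. This Python sketch assumes hypothetical `User` and `Payment` records and a `route_payment` helper; it is an illustration of the described configuration, not Heap's or Stripe's API. Applying the same most-recent-Checkout tie-break to the email fallback is an extra assumption, since the rule above only specifies it for duplicate stripe_ids.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class User:
    user_id: str
    email: Optional[str] = None
    stripe_id: Optional[str] = None
    last_checkout_at: int = 0  # time of this user's most recent Checkout


@dataclass(frozen=True)
class Payment:
    stripe_id: str
    email: str
    amount_cents: int


def most_recent_checkout(candidates: List[User]) -> User:
    return max(candidates, key=lambda u: u.last_checkout_at)


def route_payment(payment: Payment, users: List[User]) -> Optional[User]:
    """Choose the user a third-party payment event should be attached to."""
    # Rule 1: match on stripe_id; if several users share it, prefer the one
    # who most recently performed a Checkout.
    by_stripe = [u for u in users if u.stripe_id == payment.stripe_id]
    if by_stripe:
        return most_recent_checkout(by_stripe)
    # Rule 2: fall back to the payment email. Reusing the same tie-break
    # here is an assumption, not something the rule above specifies.
    by_email = [u for u in users if u.email == payment.email]
    if by_email:
        return most_recent_checkout(by_email)
    return None  # no match yet; the payment stays unattributed


users = [
    User("u1", email="a@example.com", stripe_id="cus_1", last_checkout_at=50),
    User("u2", stripe_id="cus_1", last_checkout_at=90),
    User("u3", email="c@example.com"),
]
print(route_payment(Payment("cus_1", "a@example.com", 1200), users).user_id)  # u2
print(route_payment(Payment("cus_9", "c@example.com", 500), users).user_id)  # u3
print(route_payment(Payment("cus_9", "z@example.com", 500), users))  # None
```

Because the rule is data rather than tracking code, changing it (say, swapping the order of the two lookups) only requires re-running it over the stored payments.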

Throughout our product, you should be able to analyze what appears to be a uniform dataset of events and users, but is in fact a virtual dataset, composed of multiple raw datasets and some configuration. That configuration should be non-destructively, retroactively editable – a virtualization layer over your raw datasets.

Why Hasn’t Anyone Done This?

This raises an important question: why doesn’t flexible, virtualized data and analytics exist yet? The answer is that building around a virtual dataset is massively more complicated and has a serious performance cost.

Virtualizing an event schema means capturing 10x as much data. Of that data, 90% will never be relevant to any analyses, because it will never match any Virtual Events. Instead of evaluating queries on top of events that have a hard-coded schema, we need to do so in terms of Virtual Events that are defined as predicates, and those predicates can change at any time.

At a high level, automatic capture and data virtualization require:

Capturing everything that happens, and allowing users to define events on top of that raw dataset.

Supporting complex operations – ad hoc analyses, syncs to warehouses, real-time operations – that use a Virtual Event schema that can change at any time.

Should you weaken either of these requirements, the problem becomes easy and we can deploy existing technology to solve it. If you don’t need to support a Virtual Event schema that’s always changing, you can solve this problem with an off-the-shelf column store. If you don’t need to support complex analyses, and you don’t support syncing Virtual Events to warehouses, you can pre-aggregate your query results. The same is true if analyses must be specified upfront. But any of these degradations means losing most of the power of the tool.

To make it possible to do ad hoc analyses on a retroactive event schema, we at Heap have invested years of engineering development into building a new distributed system on top of proven open source technologies: PostgreSQL, Kafka, Redis, ZooKeeper, Citus, ZFS, and Spark.

One of the key ideas is our use of PostgreSQL partial indexes to represent Virtual Events. These are performant enough at write time that we can still deliver a real-time dataset, even with thousands of Virtual Events, because each one covers a tiny slice of the raw dataset. This makes it possible to run analyses over Virtual Events that are performant at scale, without ever modifying the underlying data. It gives us the flexibility and power of a schema-on-read data system, with the performance of schema-on-write.
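A PostgreSQL partial index (`CREATE INDEX name ON table (columns) WHERE predicate`) contains only the rows that satisfy its predicate. The in-memory Python sketch below mimics that idea to show why it fits Virtual Events: defining an event is a one-time backfill, each insert tests every predicate but stores only matches, and reads touch only the matching rows. `EventStore` and its methods are hypothetical; the sketch ignores sharding, persistence, and concurrency and is not Heap's implementation.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class RawEvent:
    # Hypothetical minimal shape of a captured interaction.
    event_id: int
    kind: str
    target: str


Predicate = Callable[[RawEvent], bool]


class EventStore:
    """Append-only raw rows plus one 'partial index' per Virtual Event."""

    def __init__(self) -> None:
        self.rows: list[RawEvent] = []
        self.predicates: dict[str, Predicate] = {}
        self.indexes: dict[str, list[int]] = {}  # name -> matching row positions

    def add_virtual_event(self, name: str, predicate: Predicate) -> None:
        # Like CREATE INDEX ... WHERE: one backfill pass over existing rows.
        self.predicates[name] = predicate
        self.indexes[name] = [i for i, e in enumerate(self.rows) if predicate(e)]

    def drop_virtual_event(self, name: str) -> None:
        # Like DROP INDEX: the raw rows are untouched.
        del self.predicates[name]
        del self.indexes[name]

    def insert(self, event: RawEvent) -> None:
        # Write path: test every predicate, but store only the matches,
        # so each index stays a small slice of the raw data.
        position = len(self.rows)
        self.rows.append(event)
        for name, predicate in self.predicates.items():
            if predicate(event):
                self.indexes[name].append(position)

    def query(self, name: str) -> list[RawEvent]:
        # Read path: visit only indexed rows instead of scanning everything.
        return [self.rows[i] for i in self.indexes[name]]


store = EventStore()
store.insert(RawEvent(1, "click", "button#checkout"))
store.insert(RawEvent(2, "click", "a#help"))
store.add_virtual_event(
    "Checkout", lambda e: e.kind == "click" and e.target == "button#checkout"
)
store.insert(RawEvent(3, "click", "button#checkout"))
print([e.event_id for e in store.query("Checkout")])  # [1, 3]
```

In this sketch, storage per Virtual Event grows with its matches rather than with total raw volume, while write cost grows with the number of definitions; that trade-off is what makes it tolerable that most captured data never matches any Virtual Event.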

The World is Changing

With new technology, data virtualization is now hard but possible. Mature distributed systems primitives, like Kafka and ZooKeeper, make it possible for small teams to build sophisticated analysis infrastructure. Cloud computing makes it dramatically easier to scale up these new backends. Moore’s law and the inexorably decreasing cost of storage make it possible to analyze this virtual dataset without breaking the bank. These and other factors combine such that we can build systems in 2018 that were unrealistic in 2008 and unimaginable in 1998.

It has taken years of upfront engineering investment on our part to build a data virtualization system that’s fast, stable, scalable, and cost-effective. Over the next two years, we will be building on this core technology to virtualize other key aspects of our customers’ data, to make them more flexible and retroactively modifiable.

In 2020, it will be obvious that there should be a layer of indirection between the semantic data we analyze and the underlying raw data and configurations. The fact that we used to analyze raw clicks with names like checkout_flow_2_new will look absurd.

Creating the right layer of indirection, and building the technology to deliver it effectively, is in some sense the single meta-problem of systems engineering. It is the most important guiding principle in the design and implementation of real-world software systems. Consider the obvious parallels: the operating system, which abstracts away low-level hardware and device drivers; the virtual machine, which abstracts away the physical machine; and DNS, which abstracts away machine IP addresses. Software-defined networking is eating networking for the same fundamental reasons. Virtualization will come to datasets – the only question is when.

If you’d like to create the future of data virtualization and build an analytics tool that will deliver on the promise of making data actually useful, we would love to hear from you.