Running 10 Million PostgreSQL Indexes In Production (And Counting)

Heap faces a unique set of challenges based on our approach to analytics: capturing every data point. After several attempts, we’ve managed to come up with a solution that works reliably for our use case. As a consequence of that solution, we have wound up with over 10 million indexes in our database!

What Heap Is

Heap is an analytics tool that captures everything that happens on a customer’s website. After the Heap snippet is added to a website, Heap will automatically capture every event that users do on that website. That means every click, pageview, form submission, etc, are all captured and logged into Heap. From there, the owner of the website can use Heap to answer questions about their users.

All questions in Heap are phrased in terms of “defined events”. An example of a defined event would be “Login”, which could be defined as a click where the text of the click target is “Login”. The event definition may also make use of more complicated restrictions such as requiring the CSS hierarchy of what was clicked on to match the CSS selector div.header button#login. After the login event is defined, it can be used to query all of the login events captured by Heap, ever since Heap was installed. It works just as if you had code that manually tracked logins the entire time.

While queries over historical data based on newly defined events are an amazing feature, they are extremely difficult to implement. We need to store all of the raw data indefinitely, and it is impossible to tell upfront what subset of it will end up being valuable, even though 90% of it will never be useful.

How Heap Solves This Problem

Our users’ queries are built in terms of these defined events, so one of the basic building blocks of our query infrastructure is the ability to find all instances of a particular defined event. We do this by making use of an amazing PostgreSQL feature: partial indexes.

A partial index is an index over a subset of the rows in a table. You define a partial index in nearly the same way you define a regular index; the only difference is that you specify a predicate in addition to the normal options. Only rows that satisfy the predicate will be included in the index. When a customer creates a new event definition, we create a partial index that contains only the events that match the event definition. As an example, if a customer were to define a login event, code similar to the following would be executed in our database:

CREATE INDEX ON events (time) WHERE type = ‘click’ AND text = ‘login’

Since the index contains a given row if and only if the row matches the event definition, we can use it to quickly find all events that satisfy the definition. Additionally, since the index is constructed on time, we can simultaneously use the index to filter for only the events that occurred in a given time range.

Once we are able to find instances of an event definition that are in a given time range, it becomes easy to implement more complicated queries such as graphs and conversion funnels. Let’s take a moment to look at how funnels are implemented. Let’s say a customer wants to know what percentage of the users who have signed up in a given week later made a purchase that same week. This would be implemented with a SQL query similar to the following:

This query works by first finding all events that match the login and purchase events that fall into the time range of the query. This can be done by making use of the partial indexes for the events. From there, the query constructs an array of zeros and ones for each user representing the order in which they did the events. In the array, a zero represents a signup and a one represents a purchase. For example, the array [0, 1, 1] would mean they signed up, later made a purchase, then after that made another purchase. In the case of an N step funnel, the numbers would range from 0 to N-1.

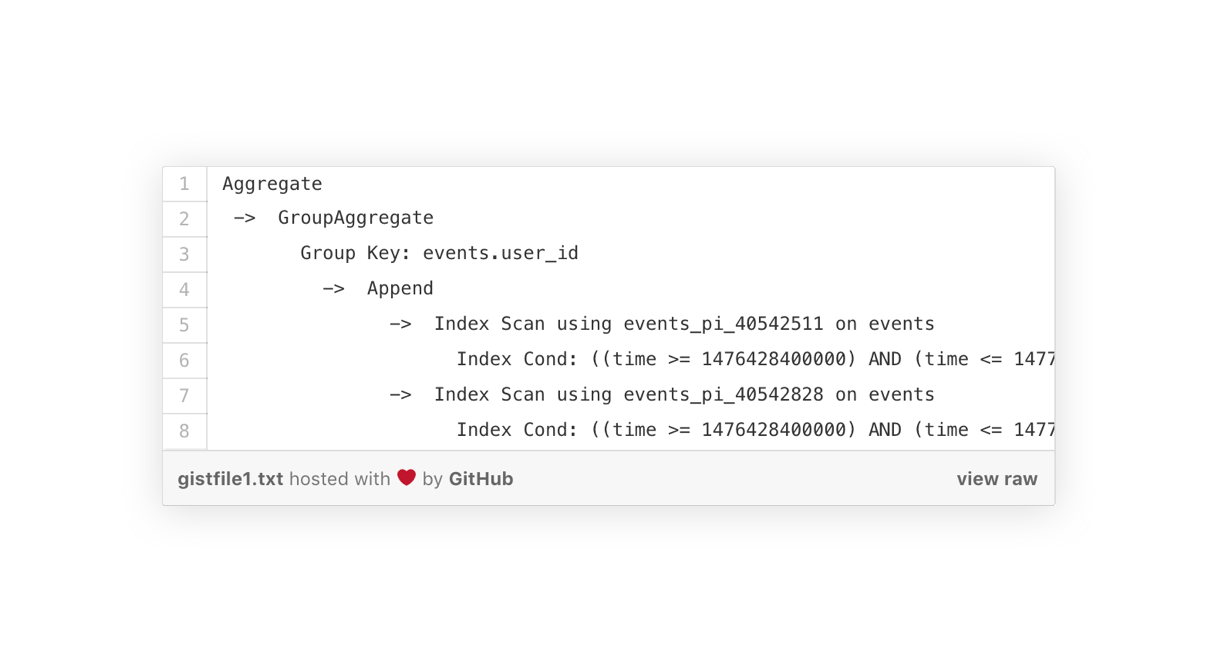

The query then passes those arrays through a PostgreSQL UDF we wrote in collaboration with the team at Citus Data. The UDF takes one of the arrays representing the order an individual did the events and returns an array that represents how far they got in the funnel. In the returned array, a one represents that the user completed that step, and a zero represents that they did not. The array [0, 0] would mean the user never signed up, the array [1, 0] would mean the user signed up but never made a purchase, and the array [1, 1] would mean the user completed the entire funnel. From there, the query sums all of those arrays to obtain an array with how many users made it through each step of the funnel. While the query looks and sounds complicated, the plan generated by the query is actually really simple since it makes use of the partial indexes:

It just fetches all of the events by using the two relevant partial indexes, groups the events by user, and performs an aggregation over each.

Advantages Of Partial Indexes

A great feature of partial indexes is that Postgres will automatically maintain them for us, and they will always be consistent with our raw data. Whenever a new raw event comes in, PostgreSQL will automatically check the raw event against all of the existing partial indexes. When the event satisfies the predicate for one of those partial indexes, the event will be inserted into that index.

Another nice property of our indexing strategy is that it degrades gracefully. If a partial index exists, PostgreSQL will automatically use it. If we haven’t yet had time to create a partial index, PostgreSQL will fallback to the next best strategy. While the query will be slower when the partial index doesn’t exist, it can still be executed and will still be correct. Once the partial index is created, PostgreSQL will automatically start using it, and the query will become much faster.

What really makes partial indexes so great is that PostgreSQL handles all of the work around building, maintaining, and querying the partial indexes for us. Since partial indexes are so easy to create and work with, we’ve wound up with over 10 million partial indexes across our entire cluster.

This isn’t as scary as it sounds for a two main reasons. First, we shard all of our data by customer. Each table in our database holds only one customer’s data, so each table has a only a few thousand indexes at most. Second, these events are relatively rare. The most common defined events make up only a few percent of a customer’s raw events, and most are much more rare. This means that we perform relatively little I/O maintaining this schema, because most incoming events match no event definitions and therefore don’t need to be written to any of the indexes. Similarly, the indexes don’t take up much space on disk.

Downsides Of Partial Indexes

Although partial indexes work well for our use case, there are a few downsides to having millions of them across our entire cluster. The biggest cost is the amount of CPU required to build and maintain them. If a Heap customer has a thousand defined events, we have to evaluate all of the predicates for those defined events for every new event that comes in. Most of these event definitions contain a regular expression that is used to match the CSS hierarchy of the event, which are expensive to evaluate at our scale of data.

We’ve also run into some issues around creating the partial indexes. Ideally, we would use CREATE INDEX CONCURRENTLY to create the partial indexes in the background, without impacting writes to the table. Unfortunately, PostgreSQL can only perform one concurrent index build at a time per table. That means when sent multiple CREATE INDEX CONCURRENTLY commands on the same table, PostgreSQL will execute the index builds in series. This is in addition to concurrent index builds taking several times longer than normal index builds. Another issue we’ve repeatedly run into is that a concurrent index build must wait for all existing transactions to finish. That means if any long running query is in flight (e.g. any customer is bulk-exporting their data), every index creation will have to wait for that query to finish.

Because of the issues around building indexes concurrently, we need to use the normal CREATE INDEX instead of CREATE INDEX CONCURRENTLY. To handle the inability to write to a table while a normal index build is taking place, our data ingestion pipeline will defer writing a customer’s events to PostgreSQL, whenever we are creating indexes for that customer. Even when running multiple index builds in parallel it can still take a long time to create of the indexes. Building all of the indexes for a table of one of our largest customers can take a full 24 hours! Interestingly, partial index creations are CPU bound. This makes sense since PostgreSQL has to evaluate the partial index predicate against every row in the table in order to construct the partial index.

Partial indexes are a great fit for our use case. With this feature, our customers are able to query hundreds of terabytes of data in seconds. All we have to do is send the CREATE INDEX command to PostgreSQL and it handles the construction, maintenance, and querying of the partial indexes for us.

If you want to learn more about Heap's infrastructure, check out this talk where Dan discusses our backend in depth. Dan will also be speaking at PGConf SV 2016.

If all of this sounds interesting to you, Heap is hiring so please apply or reach out to Heap on twitter.

Additional resources: