Step Suggestions: How We Take the "Guess and Check" Out of Building Funnels

I’m a data scientist at Heap, and there are two reasons I love my job.

One is that I’m productizing myself: I’m looking for features that can automate the kind of analytics work I used to do for one product at a time. A second is that as I’m developing these tools, I get to do what I’d call high-throughput data science, where I apply an approach to thousands of product problems at once and discover how often it’s useful and in what situations it fails.

I got to do both of these kinds of work while building the Step Suggestions feature, part of our Heap Illuminate suite. I’ll share how we took a product analytics process (specifying a funnel of steps) that I used to do through “guess and check”, and partially automated it using statistical principles. I’ll also show how we benchmarked the feature across thousands of product problems to measure how often it helps.

Our Step Suggestions Feature

Funnels are a powerful tool in product analytics for measuring how users proceed through a multi-step flow, and where they drop off.

Let’s say we wanted to understand how users progressed through a simple form, like a signup page. Improving the conversion rate of a flow like this is usually a key business goal. To measure this flow with a funnel, you select a sequence of 2 or more steps, and see how many users make it from one step to the next.
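To make the mechanics concrete, here is a minimal sketch of how a funnel could be counted from per-user event lists. It is an illustration under stated assumptions, not Heap's implementation: the `funnel_counts` helper, the event names, and the four example users are all hypothetical.

```python
from typing import Dict, List, Sequence


def funnel_counts(user_events: Dict[str, List[str]], steps: Sequence[str]) -> List[int]:
    """Count how many users reach each funnel step, in order.

    A user reaches step i only if they performed steps 0..i in sequence.
    """
    counts = [0] * len(steps)
    for events in user_events.values():
        position = 0  # where in this user's event list to keep searching
        for i, step in enumerate(steps):
            try:
                position = events.index(step, position) + 1
            except ValueError:
                break  # user never did this step after the previous one
            counts[i] += 1
    return counts


# Hypothetical event logs, one ordered list per user.
users = {
    "u1": ["View Signup", "Change - password", "Submit"],
    "u2": ["View Signup", "Change - password"],
    "u3": ["View Signup"],
    "u4": ["Submit"],  # never viewed the signup page, so not in the funnel
}

two_step = funnel_counts(users, ["View Signup", "Submit"])
three_step = funnel_counts(users, ["View Signup", "Change - password", "Submit"])
print(two_step, three_step)
```

Each user is counted at a step only if they also did every earlier step, which is why adding a step can never raise the number of completers.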

If we want to use this funnel to guide our product improvements, we’ll usually get more value if we break it up into multiple steps, so we can understand at which stage of the flow users are dropping off and can prioritize our engineering effort. We know our clients get a lot of value from multi-step funnels: of the 1000 funnel reports on Heap most visited by clients this year, 73% have at least 3 steps, and 25% have at least 6!

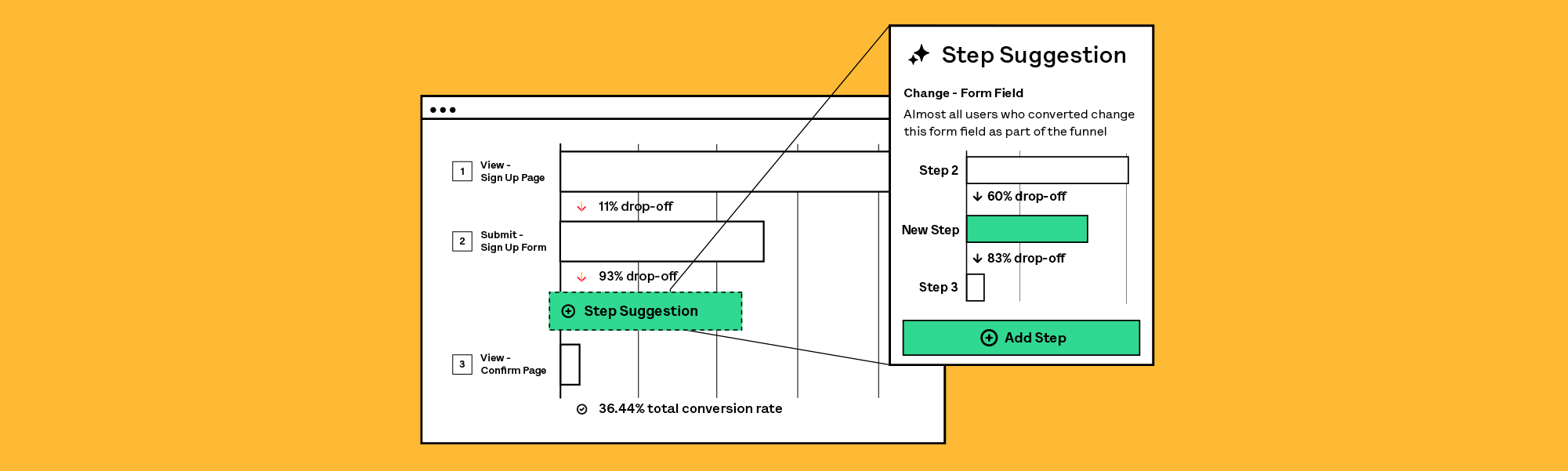

To help build these multi-step funnels, we’ve launched Step Suggestions. This feature automatically scans across user behavior within a funnel and suggests new intermediate steps that break the funnel down into understandable steps.

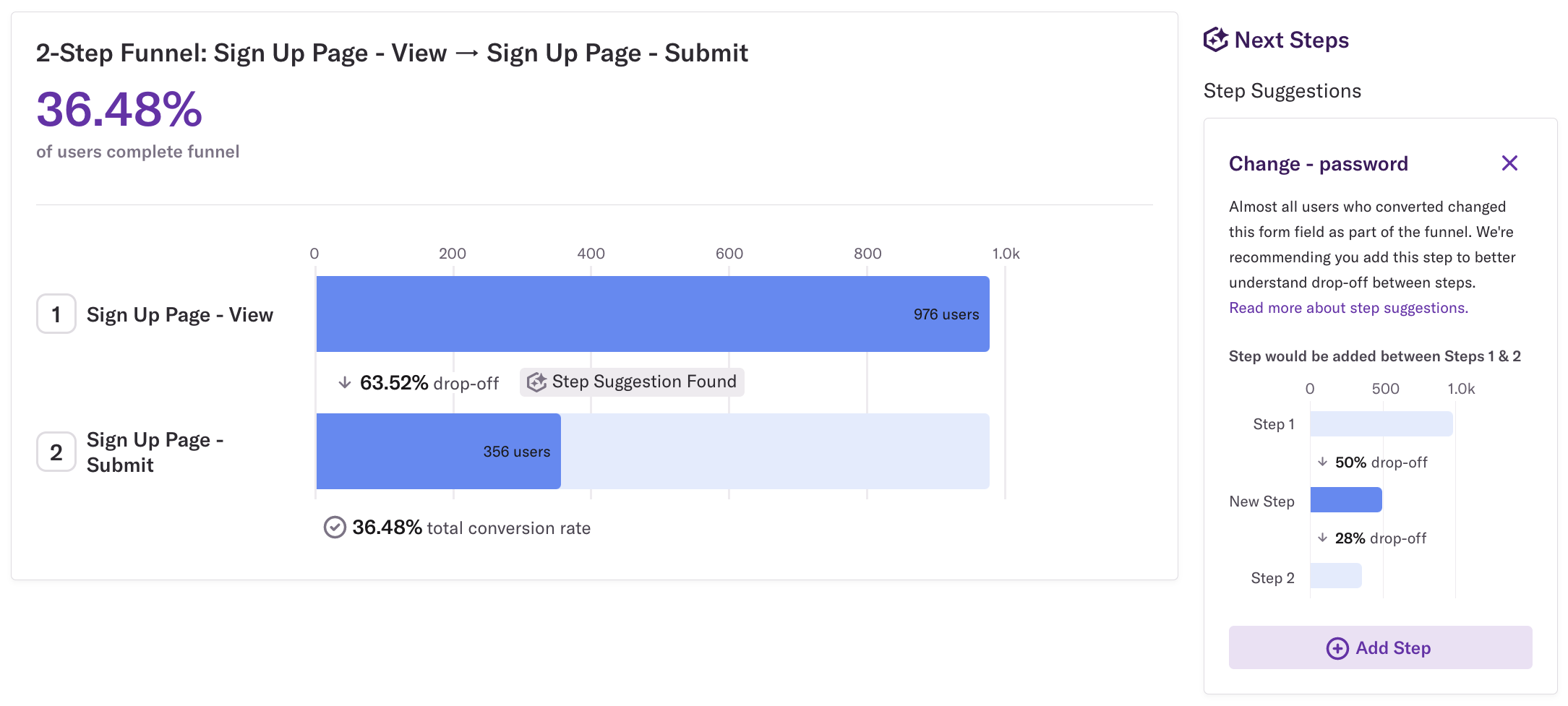

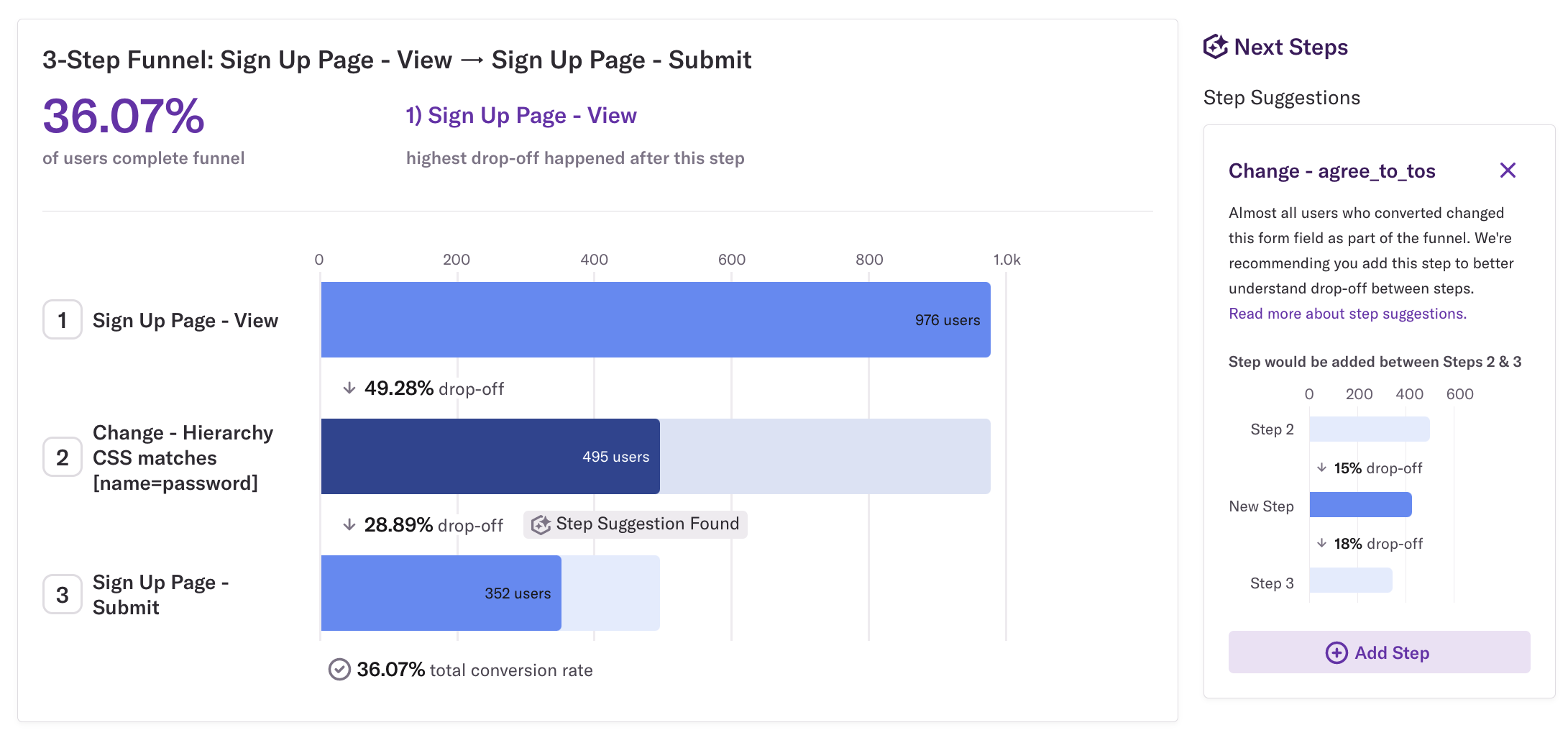

This shows us we can add a new “Change - password” event (the step where users change the “Password” field to choose a password) and create a new 3-step funnel. If we accept that suggestion, we see:

We now know most users are dropping off before entering a password. On the right we also see a new suggestion for a 4th step to add, letting us build up a detailed funnel and fully map out the flow.

How do we automate adding these steps?

If you’ve done product analytics work, you’ve likely built a lot of multi-step funnels through “guess and check”, and you probably have a feel for what makes a good step. But to automate this suggestion process, we had to codify this into quantitative criteria. Exactly what are we doing when we’re judging the quality of a step?

After partnering with clients to analyze dozens of funnels in depth, we zeroed in on two quantitative rules:

A good funnel step is ubiquitous: almost no users reach the next step without performing this action.

A good funnel step is divisive: some users drop off before performing the step, and others drop off after.

Let’s dig into what those criteria mean.

Ubiquity: Everyone who completes the step does this along the way

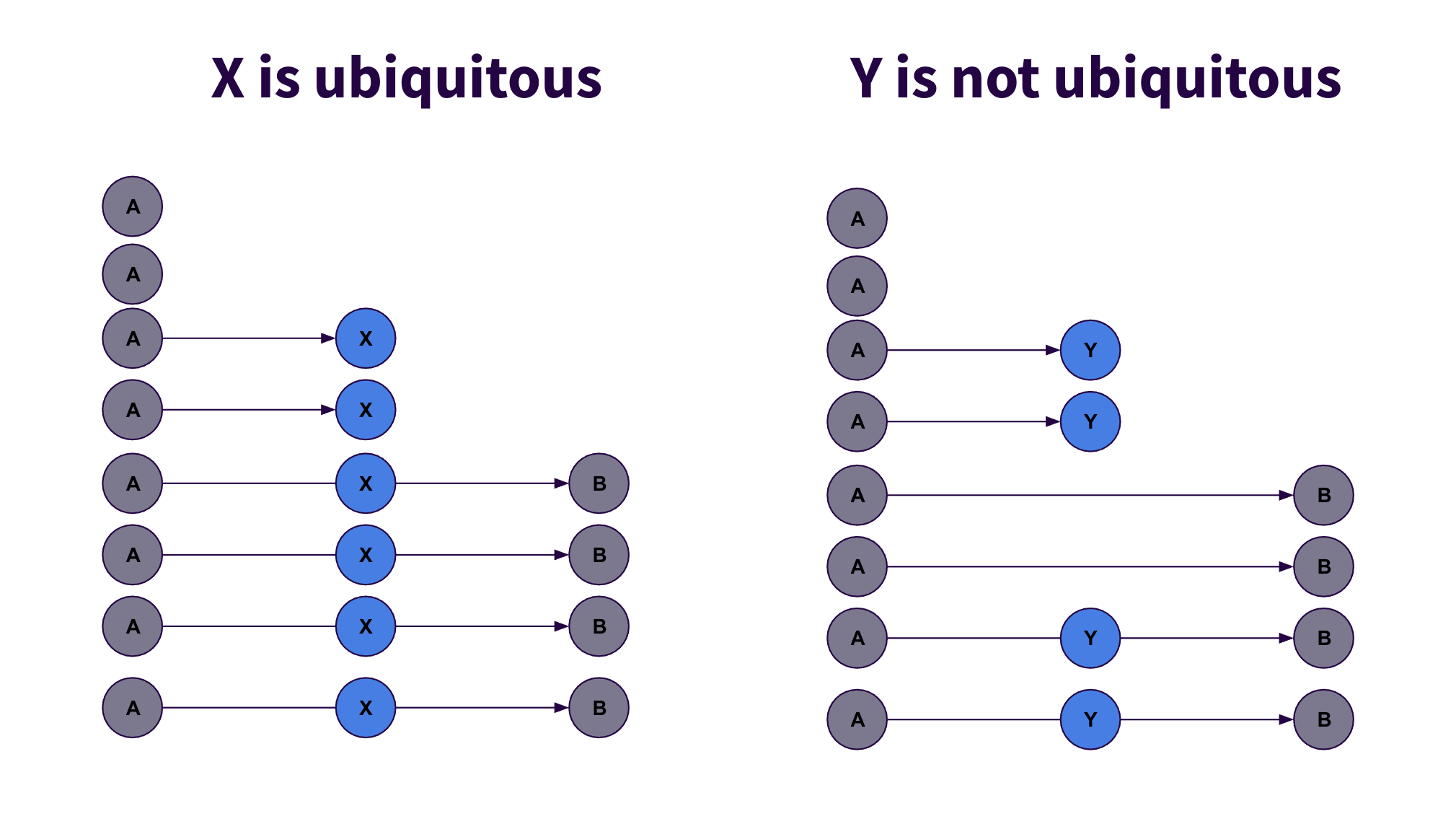

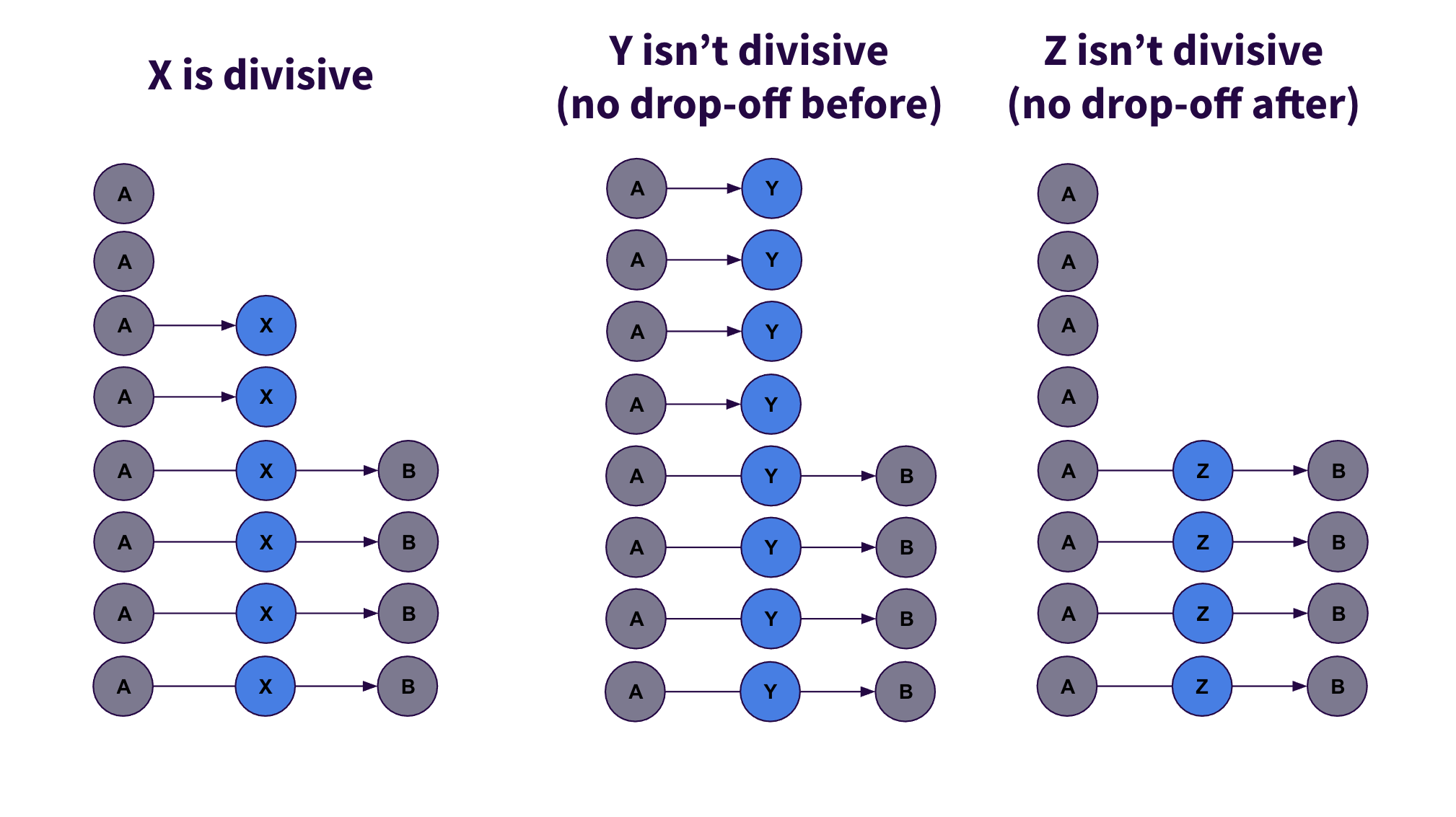

Consider a funnel in which 8 users start at A and 4 users make it to B (conversion rate = 50%). We’re comparing two intermediate steps we might add, X or Y.

X is ubiquitous: every user who gets from A to B has to do step X in between.

X might be a step like “Enter Password” or “Click Add to Cart”: something that users have to do along the way.

Y is not ubiquitous: only 50% of the users who get from A to B do Y in between.

Y might be a step like “Subscribe to Mailing List” or “View Warranty”, something that users can skip while still completing the flow.

Adding Y as an intermediate step would be disastrous to your funnel. Since a funnel requires users to complete each step along the way, it would change your conversion rate from 50% (4 completers) to 25% (2 completers).

For a step to qualify as a suggestion, we require that at least 97% of completers performed it along the way.
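The ubiquity check can be sketched in a few lines. This is a rough illustration, assuming ubiquity is measured as the share of A-to-B completers who performed the candidate step in between; the `ubiquity` function and the toy event logs are hypothetical, built to match the 8-user example above.

```python
from typing import Dict, List

UBIQUITY_THRESHOLD = 0.97  # the threshold quoted in this post


def ubiquity(user_events: Dict[str, List[str]], start: str, end: str, candidate: str) -> float:
    """Share of users completing start -> end who did `candidate` in between."""
    completers = 0
    did_candidate = 0
    for events in user_events.values():
        if start not in events:
            continue
        i = events.index(start)
        if end not in events[i + 1:]:
            continue  # user started but never completed
        j = events.index(end, i + 1)
        completers += 1
        if candidate in events[i + 1:j]:
            did_candidate += 1
    return did_candidate / completers if completers else 0.0


# Toy data: 8 users start at A, 4 reach B; all completers do X, half do Y.
users = {
    "u1": ["A", "X", "Y", "B"],
    "u2": ["A", "X", "Y", "B"],
    "u3": ["A", "X", "B"],
    "u4": ["A", "X", "B"],
    "u5": ["A", "X"],
    "u6": ["A", "X"],
    "u7": ["A"],
    "u8": ["A"],
}

ubiquity_x = ubiquity(users, "A", "B", "X")
ubiquity_y = ubiquity(users, "A", "B", "Y")
print(ubiquity_x >= UBIQUITY_THRESHOLD, ubiquity_y >= UBIQUITY_THRESHOLD)
```

In this toy data X passes the threshold and Y does not, matching the comparison above.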

Divisiveness: Some users drop off before the step, and some drop off after

Once we know a step is ubiquitous, we know it won’t hurt the funnel to add it. But will it help? What makes a useful funnel step? To quantify that, we’ll introduce the metric of divisiveness, calculated as the smaller of the drop-off before and the drop-off after.

X is a good milestone: 25% of users drop off from A to X, and 33% drop off from X to B (divisiveness = min(25%, 33%) = 25%).

Y isn’t a good milestone: 0% of users drop off from A to Y (divisiveness = min(0%, 50%) = 0%).

Z isn’t a good milestone: 0% of users drop off from Z to B (divisiveness = min(50%, 0%) = 0%).

With Y or Z, you’re gaining no new information: they’re basically synonymous with the start or end of your funnel. The purpose of a multi-step funnel is to identify opportunities for improvement, but these milestones leave “no room for improvement” on one side. An example of a step suggestion that often isn’t divisive is a “Submit” button at the end of a form: if 100% of people who click that button reach the next page, then it’s not worth adding a step to break that down.
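The divisiveness arithmetic for X, Y, and Z can be written out as a small sketch. The `divisiveness` function is a hypothetical helper, and the user counts are assumptions chosen to reproduce the percentages above (8 users at A, 4 at B).

```python
def divisiveness(n_start: int, n_step: int, n_end: int) -> float:
    """Smaller of the drop-off before and the drop-off after a candidate step.

    n_start: users at the previous funnel step
    n_step:  users who reach the candidate step
    n_end:   users who reach the next funnel step
    """
    if n_start == 0 or n_step == 0:
        return 0.0  # nothing to split
    drop_before = 1 - n_step / n_start
    drop_after = 1 - n_end / n_step
    return min(drop_before, drop_after)


# Counts assumed for illustration: 8 users at A, 4 users at B.
div_x = divisiveness(8, 6, 4)  # 25% drop before, 33% drop after
div_y = divisiveness(8, 8, 4)  # nobody drops before Y
div_z = divisiveness(8, 4, 4)  # nobody drops after Z
print(div_x, div_y, div_z)
```

Taking the minimum means a step only scores well when there is meaningful drop-off on both sides of it.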

Why are Step Suggestions valuable?

As I described earlier, the second thing I love about my job is we get to do high-throughput data science. I don’t mean working with large amounts of data; I mean that we’re working with a large number of analogous problems.

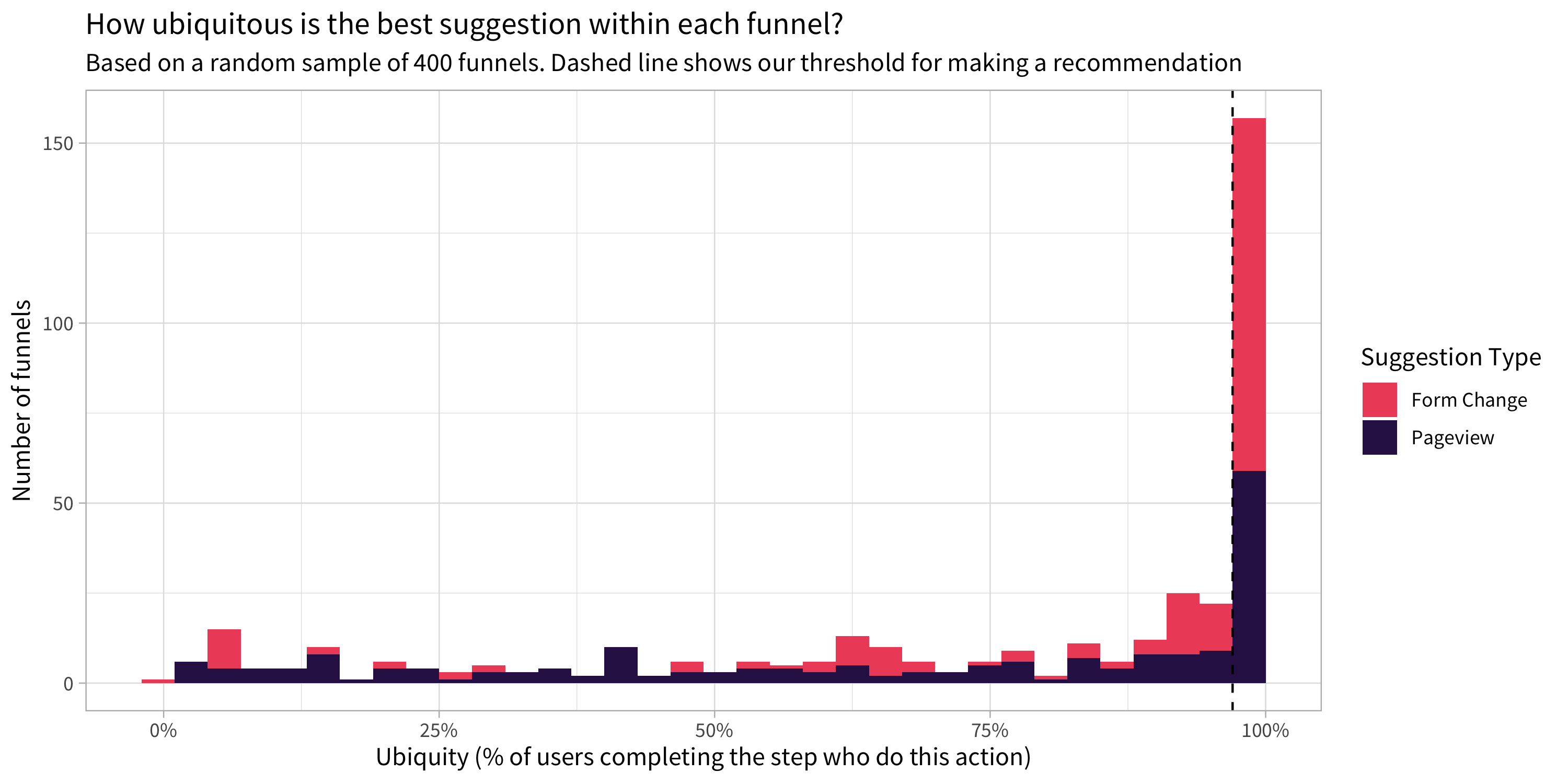

Heap is used by thousands of companies to examine funnels in their product. This means we can examine how many funnels would get a new step, and how divisive each of those new steps would be. This has helped us validate this feature and work out kinks in our approach.

Many funnels get automated Step Suggestions

If a funnel already broke down a flow as parsimoniously as possible, then the feature wouldn’t add anything. But we discovered that many Heap funnels could gain information from an intermediate step: 38% of the funnels run in Heap get at least one suggestion.

We can see just how ubiquitous these new suggestions are. A large number of funnels had at least one step that everyone performed along the way (either a pageview or a form change) that they hadn’t yet included:

As we discussed, intermediate steps are especially useful if they’re divisive: if they split an existing step such that some users drop off before, and some drop off after. That turns out to be true:

78% of Step Suggestions split into two steps that each have at least 10% drop-off

16% of Step Suggestions split into two steps that each have at least 50% drop-off

Of our eventual suggestions, 41% are form field change events, like entering a password or checking a checkbox. This is an advantage of Heap’s product; we capture every click and form field change so that we can break down the details of where users drop off.

If you add intermediate milestones through guess-and-check, it’s easy to make a mistake

Even without this feature, you can always add steps yourself based on your knowledge of the product. But it’s surprisingly easy to accidentally add a step that isn’t actually required.

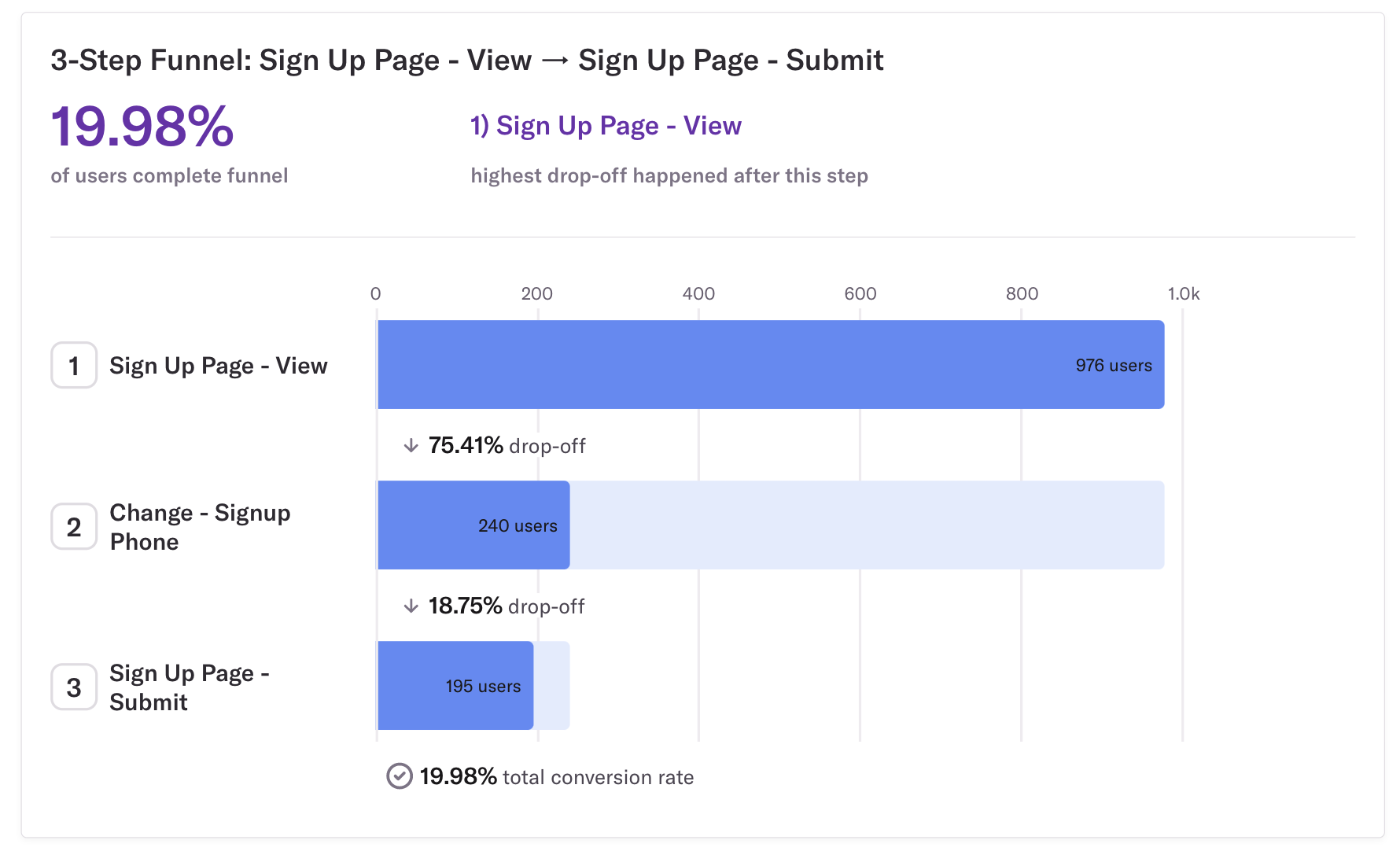

For instance, in the signup form we examined earlier (which had a conversion rate of 36%), entering a phone number was an optional step. Suppose we’d added it to the funnel anyway:

We would have measured the conversion rate as 20%, way lower than the real conversion rate. Hundreds of users successfully completed the signup process while our funnel was still patiently waiting for them to enter a phone number!

It’s surprisingly easy to add a skippable step to a funnel without realizing it. We can see from our high-throughput screen of Heap’s funnels that this is a common issue.

16% of funnels with at least 3 steps include a step that more than 25% of users skip!

In 12% of funnels, a skippable step would change the answer to the question “which step has the highest drop-off?” This means you could focus on improving the wrong part of your flow.
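Here is a small sketch of how a skippable step can change which transition looks worst. The step names and intermediate counts are made up for illustration; only the overall 36% and 20% conversion rates echo the signup example above, and `worst_transition` is a hypothetical helper.

```python
from typing import List, Sequence, Tuple


def worst_transition(steps: Sequence[str], counts: Sequence[int]) -> Tuple[str, str]:
    """Return the pair of adjacent steps with the highest drop-off rate."""
    drop_offs: List[float] = [
        1 - after / before if before else 0.0
        for before, after in zip(counts, counts[1:])
    ]
    worst = max(range(len(drop_offs)), key=drop_offs.__getitem__)
    return steps[worst], steps[worst + 1]


# Hypothetical funnel with only required steps: 1000 -> 360 users (36%).
required = worst_transition(
    ["View signup", "Enter password", "Submit"],
    [1000, 800, 360],
)

# Same flow with an optional phone-number step inserted: 1000 -> 200 users (20%).
with_optional = worst_transition(
    ["View signup", "Enter phone", "Enter password", "Submit"],
    [1000, 400, 350, 200],
)
print(required, with_optional)
```

With these assumed counts, the biggest drop-off moves from the password-to-submit transition to the optional phone field, so the funnel would point you at the wrong part of the flow.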

Removing the “guess and check” from a process like building funnels doesn’t just mean reaching the same results more quickly. It can guide you towards more accurate results, avoiding mistakes that substantially affect your conclusions.

Looking for more ways to get proactive insights?

Step Suggestions pairs well with Effort Analysis as two of our newest proactive insights features. As you discover which steps involve more user effort than you expect, you can break those steps down to focus on more and more specific parts of your flow.

In future posts we’ll be sharing how more of our proactive insights features work! And if you’re an engineer who’s excited about building tools like these to power the next generation of product analytics, we’re hiring!