Creating PostgreSQL Arrays Without A Quadratic Blowup

At Heap, we lean on PostgreSQL for most of the backend heavy lifting.[1] We store each event as an hstore blob, and we keep a PostgreSQL array of events done by each user we track, sorted by time. Hstore lets us attach properties to events in a flexible way, and arrays of events give us great performance, especially for funnel queries, in which we compute the drop off between different steps in a conversion funnel.

In this post, we’ll take a look at a PostgreSQL function that hangs on large inputs and rewrite it in an efficient, idiomatic way.

If you’re writing a PL/pgSQL function that returns an array, it can be tempting to create an empty array and build up results in a loop with [array\_append] or [array\_cat]. But, as is often the case, procedural idioms in a relational database result in bad times.

Consider an example function in which we create an array of hstores such that the entry at position i is "num=>i".

add blowup.sql git

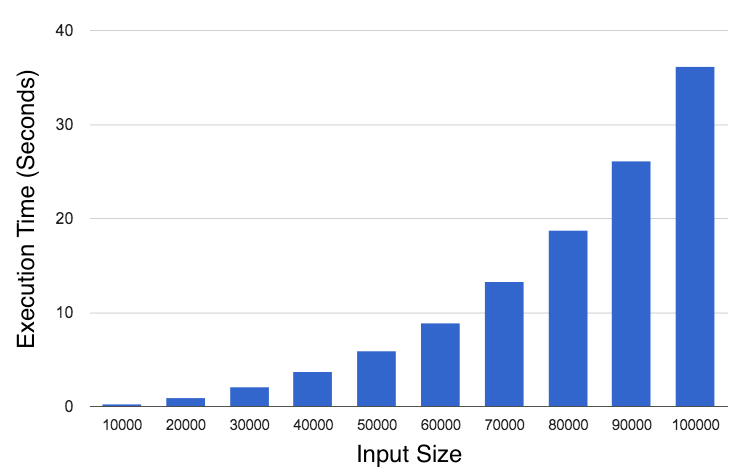

Looks simple enough, but this is bad news. This takes a quadratic amount of time to run, blowing up to 36 seconds to generate an array of 100k elements.

Test queries were timed on a MacBook Pro with a 2.4GHz i7 and 16 GB of RAM.

What’s going on here? It turns out the repeated calls to array\_append cause this quadratic explosion. When we call result := array\_append(result, ...), PostgreSQL allocates a new array that’s wide enough for the result of the array\_append call and then copies the data in. That is, array\_append(array, new\_element) is linear in the length of array, which makes the implementation above O(N2).

A lot of languages handle this idiom more gracefully. A common strategy is to resize the array that backs a list to double the existing size. With a list written this way, repeated appends would only require us to execute the “grow the array and copy over your data” operation a logarithmic number of times, and our amortized runtime would be linear.

So, PostgreSQL could be smarter, but this is not an idiomatic implementation, so we shouldn’t expect it to be. The correct way to do this is with [array\_agg] — an aggregate function that takes a set and returns all of the entries as an array.

This is fast, and it scales linearly. It takes 300 ms to generate an array of 100k elements — a 100x reduction. This query allows PostgreSQL to generate the complete set of results before materializing any arrays, so it doesn’t need to do so more than once. PostgreSQL can compute upfront exactly how long the resulting array needs to be and only needs to do one allocation.

Lesson learned: if you find yourself calling array_append or array_cat in a loop, use array_agg instead.

When you’re working with any relational database, you should reconsider any procedural code you find yourself writing. Also, it helps to have an intuition for how long something “should” take. Generating a 100k element array (with around one megabyte of total data) shouldn’t take thirty seconds, and, indeed, it doesn’t need to. We like learning stuff. Have any feedback or other PostgreSQL tips? Shoot us a note @heap.

[1] In particular, we use a lovely tool called Citus Data. More on that in another blog post!