What is Product Quality? A Practical Definition

“Let’s build a low-quality product,” said nobody ever. Yet, somehow, the world is full of them. You’re probably painfully aware of the ways in which your product isn’t high-quality, and you may have even made the decisions that got it there.

Those trade-offs are necessary, usually painful, and often difficult to navigate: how do you know when to compromise on product quality vs. insist on it? How can you ensure you’re doing it deliberately vs. by accident? How do you stand tall and defend those decisions in the face of entirely reasonable criticism from users and leaders alike?

At Heap, we’ve been thinking a lot about these questions. We’ve spent the past year and a half building up our product team, design team, and product development process, and it’s improved the product quality of new features. We’d like to take that further—to increase new-feature quality more, and to bring pre-existing functionality up to the same bar.

Of course, that’s easy to say and hard to do. How do we know it’s a worthwhile priority? How do we operationalize it—turn platitudes into process?

To begin with, it’s worth nailing down an actual definition of “product quality.”

What is product quality?

Product quality describes the degree to which we elegantly meet user needs, and encompasses the following:

Complete: For a given feature, did we solve a real problem in a complete and effective way? Or does the target user regularly have to hack her way around its deficiencies or lean on our support team? (Note that this means we have a clear definition of our target user.) Does adding it as designed leave our product in a state that feels whole and consistent?

Opinionated: Did we embed our understanding of what the target user will want to do and how she’ll best accomplish it? Are we clear with our recommended / default next action? Were we willing to inconvenience less-important personas to support more-important ones?

Usable: Can our target user figure out how to use this without undue cognitive load? Are our terminology, iconography, and flow consistent with user expectations? Do clickable elements have clear, consistent affordances? Does it conform to known usability heuristics? (Note that usable isn’t always the same as discoverable. One doesn’t pick up Photoshop without training, and that’s fine: it’s a pro tool. The same expectation doesn’t hold for Apple Photos.)

Polished: Are colors, fonts, and dimensions consistent with our design system and is everything aligned? Are icons and images rendering properly? Did we use animations to preserve context? Do actions provide effective feedback? Did we write grammatical and sensible copy? Is it stable?

Efficient: Does our solution feel fast and responsive? Can the task be completed with minimal cognitive load and low effort? This is partly about actual performance (latency, response times, number of steps) but also derives from UX details and other factors in this list.

Note that this spells COUPE, which means I’ve invented the COUPE Framework! Get ready for some webinars.

When does product quality matter?

Intuitively, everyone likes the idea of a high-quality product, but is the required investment worth making? In truth, it matters more for some businesses than others. It’s most important when:

You exist in a highly competitive market—the market is in the middle of its maturity curve. What you do is well-defined and somewhat commoditized, and you have plenty of competition. Over time, low product quality can lead to churn given the existence of robust competitors; and high product quality can serve as a meaningful differentiator.

End users are heavily involved in purchase decisions. This is true for B2C software and some SaaS businesses, but less so for traditional enterprise tools where the purchase is made top-down via a central IT department.

End users arrive with expectations built in other markets where product quality is high. This is increasingly true in the tech startup world: tools that are popular there like Slack, Dropbox, and GitHub have a reasonably high quality bar (in part because they pursued a bottom-up sales model), generating environments in which lower product quality can stick out like a sore thumb.

If these don’t apply to you and you still value product quality, don’t despair! I’ll argue that everyone should strive for a baseline of at least decent product quality. If you want a business case for that, it’s that truly bad product quality will catch up with you eventually—in support costs, in poor customer satisfaction, in vulnerability to competitors—and the longer you let it go on, the harder it is to rectify. And business case aside, do we really want to bring more bad products into the world?

How do we measure product quality?

Product quality can be difficult or even impossible to measure because causation is hard to trace. For instance: earlier this year, we spent several months updating and refining Heap’s look & feel. It was a real win for product quality, but did it move our fundamental business metrics? Tough to say—so much else happened at the same time. The right way to measure it would be via an A/B test over an extended period of time, but the changes were systemic enough that, over the length of that test, we’d effectively be building all new functionality twice.

So while it’s reasonable to assume that well-directed product quality efforts can improve the business, it’s often not practical to measure that link. But you can measure product quality itself:

Heuristic or expert evaluation. When you hire designers, you’re hiring experts in recognizing product quality. A heuristic evaluation is a powerful, subjective, low-cost way to use that expertise: a designer, alone or (even better) along with her cross-functional team, audits the experience with the help of established UX heuristics. This may seem simplistic, but in many dimensions it’s the most effective way to measure product quality.

Usability testing can tell us about Usability, the cognitive components of Efficiency, and, to some extent, Completeness and Opinionatedness. It’s useful at multiple stages of the design process. (Note, however, that usability testing is a nuanced skill that not all designers possess, and that it can’t tell you what to build, only whether what you’ve built is well-designed.)

Usage metrics can tell us about Completeness and, to some extent, Usability over time. Given that Heap is a product analytics company, it may seem odd that this is just one bullet point amongst others. Understanding user behavior is critical but isn’t the whole picture: it gives you the what but not the why. For instance, ongoing retention for a particular feature is a valuable signal about its utility and Usability, but doesn’t differentiate between those two or tell you whether your actual user matches your target user.

Customer satisfaction metrics can catch egregious issues but are imprecise. Properly-timed and -constructed surveys can complement these on a feature-by-feature basis.

Performance metrics (load times, latency) and QA can tell us about stability and performance and thus provide signal on Efficiency and Polish.
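
To make the usage-metrics point concrete, here is a minimal sketch of feature retention computed from raw usage events. The event shape (a user ID plus a date), the function name, and the seven-day period are all assumptions for illustration; this is not how Heap computes retention. Like any usage metric, the result gives you the what but not the why.

```python
from datetime import date

def feature_retention(events, period_days=7, periods=4):
    """Share of a feature's users who come back in each period after first use.

    `events` is an iterable of (user_id, date) pairs, one per use of the
    feature. Both the event shape and the period length are assumptions.
    """
    first_use = {}       # user_id -> date of first recorded use
    active_periods = {}  # user_id -> set of period indexes with any use

    # Process events chronologically so the first one seen is the first use.
    for user_id, day in sorted(events, key=lambda e: e[1]):
        first = first_use.setdefault(user_id, day)
        index = (day - first).days // period_days
        active_periods.setdefault(user_id, set()).add(index)

    cohort_size = len(first_use)
    if cohort_size == 0:
        return [0.0] * periods

    # Period 0 is always 1.0: every user is active in the period of first use.
    return [
        sum(1 for seen in active_periods.values() if i in seen) / cohort_size
        for i in range(periods)
    ]

sample_events = [
    ("a", date(2024, 1, 1)),
    ("a", date(2024, 1, 9)),   # returns in the second week
    ("b", date(2024, 1, 2)),
    ("b", date(2024, 1, 3)),   # never returns after the first week
]
retention = feature_retention(sample_events, periods=2)
```

A curve that drops sharply after period 0 is worth investigating, but it can’t tell you whether the problem is utility or Usability; pair it with one of the qualitative methods above.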

Putting a number on it

While product quality is often subjective, it can be valuable to discuss numerically so we can make comparative statements.

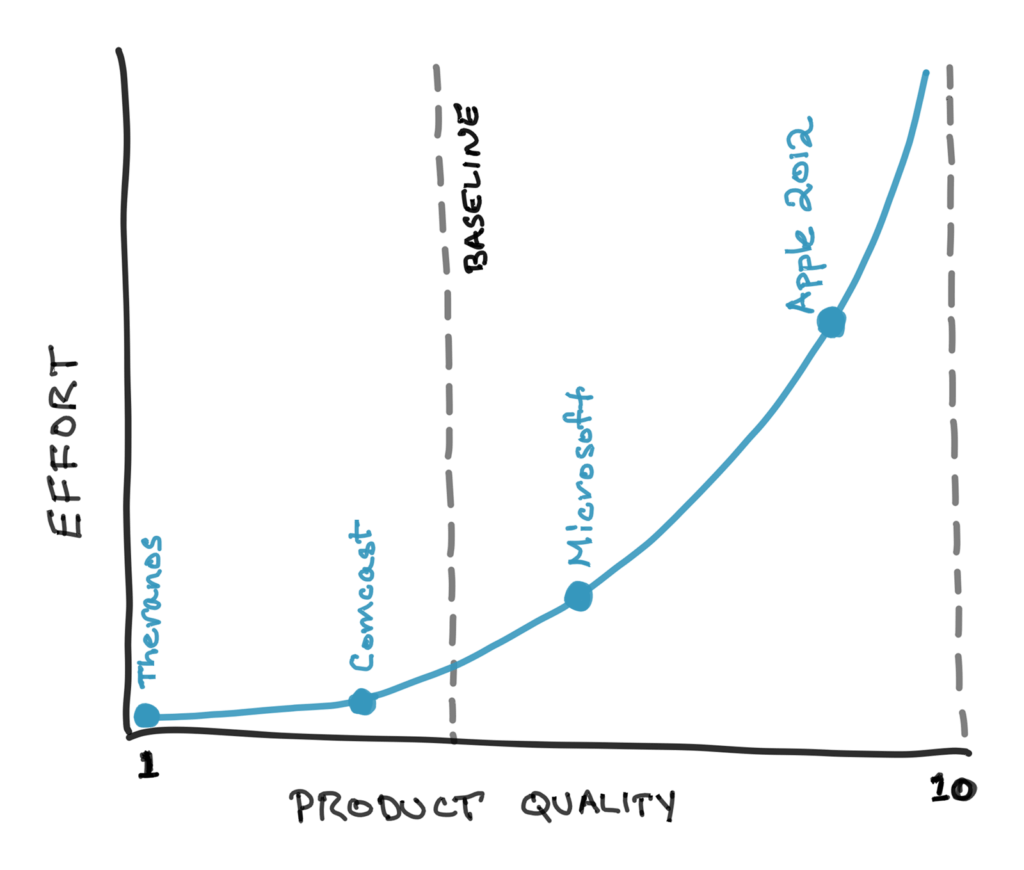

So, let’s give it a ten-point scale. 10 is perfect, and perfect is effectively impossible, so treat it as an asymptote. Theranos might be a 1, Comcast a 3, Microsoft a 4-5, and Apple in 2012 an 8.5. (Insert rant about Apple’s recently-slipping product quality.)

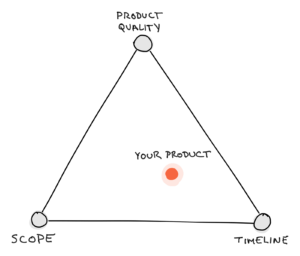

Where should you be? That’s up to you. The baseline of at-least-decent is somewhere around a 4. Beyond that, it comes down to trade-offs—typically between product quality, scope, and timeline. You can boost any of those three at the expense of the others. Your organization probably has axioms (explicit or implicit) around scope, timeline, and even code quality…what’s your axiom for product quality?
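
If you want to keep score, one option is to rate each COUPE dimension on the ten-point scale during a heuristic evaluation and compare the result with your target. The sketch below is just one way to do that bookkeeping: the function names, the sample scores, and the choice of a plain average are my assumptions rather than part of the framework.

```python
from statistics import mean

DIMENSIONS = ("Complete", "Opinionated", "Usable", "Polished", "Efficient")

def overall_score(scores):
    """Plain average across the five dimensions (an assumed weighting)."""
    return mean(scores[d] for d in DIMENSIONS)

def quality_gaps(scores, target):
    """How far each dimension falls short of the target (0 if it meets it)."""
    missing = [d for d in DIMENSIONS if d not in scores]
    if missing:
        raise ValueError(f"missing scores for: {missing}")
    return {d: max(0, target - scores[d]) for d in DIMENSIONS}

# Hypothetical scores from one heuristic evaluation of a single feature.
scores = {
    "Complete": 6,
    "Opinionated": 4,
    "Usable": 7,
    "Polished": 5,
    "Efficient": 8,
}
overall = overall_score(scores)
gaps = quality_gaps(scores, target=6)
```

The per-dimension gaps are usually more useful than the average, since a strong Efficiency score can hide a weak Opinionated one.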

It’s also worth noting that your target product quality should be release-dependent. Modern software-development methodologies value iteration, experimentation, and agility. Product quality can work against these if it’s overemphasized too early. Specifically:

Your minimum viable product (MVP) exists to test a hypothesis, not to ship a great product. Indeed, some forms of MVP—painted-door tests, concierge MVPs, prototypes—don’t live in your codebase at all. For those that do, the bar of product quality should be as low as possible without endangering the test, where you can often compensate for low quality with handholding. That assumes, of course, that you’ve hidden your MVP from all but a select, opted-in group of test users (e.g., via feature-flagging platforms like Optimizely or LaunchDarkly).

A public beta still has an experimental component to it: Will this feature deliver on its early promise? Here, you’ll need high enough product quality that you don’t erode the overall experience (or sabotage the feature in question), but you might compromise a bit on Completeness, Polish, and/or Efficiency—provided you follow through and don’t let your beta become a de facto v1.
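
As a rough illustration of release-dependent exposure, here is a hand-rolled gate that hides an MVP from everyone except opted-in testers and opens up at public beta. It’s a sketch only: the class, stage names, and user IDs are hypothetical, and it does not use the Optimizely or LaunchDarkly APIs, which you’d reach for in practice.

```python
from dataclasses import dataclass, field

@dataclass
class FeatureGate:
    """Hypothetical in-memory gate; a real flagging platform replaces this."""
    name: str
    stage: str = "mvp"  # one of "mvp", "public_beta", "ga"
    opted_in: set = field(default_factory=set)

    def is_visible_to(self, user_id):
        if self.stage == "mvp":
            # Only the select, opted-in test group sees the MVP.
            return user_id in self.opted_in
        if self.stage in ("public_beta", "ga"):
            return True
        raise ValueError(f"unknown stage: {self.stage!r}")

gate = FeatureGate("new_report", opted_in={"tester-1"})
tester_sees_mvp = gate.is_visible_to("tester-1")
stranger_sees_mvp = gate.is_visible_to("someone-else")

# Promoting to public beta widens the audience, so the quality bar rises too.
gate.stage = "public_beta"
stranger_sees_beta = gate.is_visible_to("someone-else")
```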

How do we improve product quality?

So you’ve assessed both your current and target product quality. How do you go about hitting that target?

Be axiomatic. Given the difficulty of tying product quality to business metrics, it’s necessary to treat it as axiomatic in and of itself. If we always ask, “OK, but do we really think that extra two weeks will make a difference to revenue?” about product quality, we’ll never prioritize it.

Invest in your winners. It’s easy to chase bugs and product/design debt, while letting your successful-but-unfinished features languish. After all, they’re working, right?

1.1 or sunset. For new functionality, make an explicit plan to do a 1.1 release that finalizes product quality on your 1.0. If the launch is successful (or promising), finish it; if not, kill it so the product doesn’t get bloated.

Keep an eye on the big picture. A high-quality product feels consistent and doesn’t expose organizational boundaries. As you build new functionality, take the time to ensure it matches what you had. Sometimes that means constraining yourself to existing patterns; sometimes you’ll have to evolve them. A design system can be immensely helpful here and, once implemented, can increase your front-end velocity too.

Get Opinionated. Be deliberate with choice architecture to bake a “happy path” into the product rather than optimizing for flexibility. This will make things easier for some users at the expense of others, but it’s impossible to build a high-quality experience that’s all things to all people. It does not preclude a more flexible (but less prominent) “escape hatch.”

Validate early and often. To understand what Completeness and Usability look like for your particular project, validate along the way:

Periodic foundational research (open-ended user interviews or field studies) can uncover fundamental user needs, expectations, and behaviors. Done comprehensively it can be time-consuming, but it’s also scalable: even five one-hour, properly-structured phone interviews with customers can teach you a lot.

Usability tests at key stages will give you data on Completeness and Usability as your solution evolves. Be scrappy: don’t build a prototype when a mockup would suffice, or an alpha when you could make a prototype work. But bear in mind, too, that the less participants have to imagine your product, the better the data you’ll get.

Lean on closed alphas and betas for late-stage validation that might save time polishing the wrong thing.

Most importantly, allow time for these things! Increased product quality will decrease velocity in terms of projects completed per time unit: remember those trade-offs among quality, scope, and timeline. You’ll always need to make the trade-offs, but greater product quality requires that the balance shift. That only works if everyone is bought in. And for what it’s worth, in the all-too-common case that your initial hypotheses are incorrect, you might actually save time by changing course earlier.

What’s your take?

While the notion of product quality is as old as that of a product, I haven’t seen an attempt to codify it cross-functionally (i.e., beyond “good design”), or to identify explicit techniques to improve it. So, this framework is new and largely untested. I’d love to hear what you think: does it make sense to you? How has your team approached product quality? What’s worked and what hasn’t?

And of course I’ll end with the usual strained segue and shameless plug: if you’d like to help me define and refine product quality at Heap, I’m hiring product designers and PMs! Drop me a line at dave@heap.io.