Analyzing Millions of Postgres Query Plans

Making Heap fast is a unique and particularly difficult adventure in performance engineering. Our customers run hundreds of thousands of queries per week and each one is unique. What’s more, our product is designed for rich, ad hoc analyses, so the resulting SQL is unboundedly complex.

For some background, Heap is a tool for analyzing customer interactions. We gather data from web, iOS, android, and a growing list of third-party tools such as Stripe and Salesforce, and provide a simple UI our customers can use to query that data.

A core concept in our product is the idea of an “event definition”, which is a mapping between a human-level event like “Signup” or “Checkout” and what that event means in terms of raw clicks. This layer of indirection between the concept being analyzed and the underlying data makes the product very powerful and easy to use: you can add a new event definition and run an analysis over all of your historical data, as if you had been tracking “Signups” since you first installed the product.

But it comes at a cost: everything we do becomes more complicated because the analysis is in terms of a dynamic schema that isn’t fully known until read time. Handling this indirection at all is its own fascinating topic.

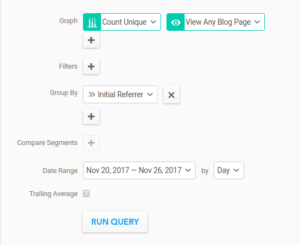

To execute a query, we take the query expressed in the UI, compile it to SQL, inline the event definitions, and then run the resulting SQL query across a cluster of PostgreSQL instances. This process can easily result in incredibly hairy SQL queries. As an example, consider this “simple” query, graphing daily unique users who view a blog post, broken down by their initial referrer:

This analysis is about as simple as it gets, and yet it produces this hairy SQL query:

The queries only become more complicated as they make use of the more powerful features we support. A query can include filters on the number of times a user has done a given event in an arbitrary time range as well as break down the results by multiple different fields simultaneously.

For example, we might want to filter the above for users who had never seen a blog post before, or for users who later viewed our pricing page, or break down the above query by the specific blog post viewed in addition to the initial referrer of the user viewing the blog post. This isn’t even getting into any of our other query types such as funnels (measuring the conversion rate between a series of events) or retention (calculating a table showing, of users who did event X, what percentage of users did event Y after 1 week, 2 weeks, etc). Each additional part of the query only makes the SQL more complicated.

Our product is designed for ad hoc, interactive analysis, as opposed to pre-canned dashboards, so in addition to being complex, these queries are typically unique as well. Furthermore, since we shard our data by customer, any query run by customer A will touch a completely disjoint dataset from any query run by customer B.

This makes comparing queries between two customers a fool’s errand since the datasets for those two customers are completely different. Making this kind of product fast is an incredibly difficult challenge. How are we even supposed to determine where we should be focusing our attention to best improve query performance?

When the company was small, we did this by having an engineer go through a sample of slow queries, and rerun them with EXPLAIN ANALYZE. EXPLAIN ANALYZE is a Postgres feature that shows how Postgres executed the query, how much time was spent executing each part of the query, as well as some additional execution information. The engineer would write up a summary explaining why the queries were slow and how they could be improved.

There were two main issues with this approach. First of all, an engineer would only be able to go through a small number of the slow queries. Analyzing these queries one at a time meant that an engineer could go through a few dozen queries at most in an afternoon. This is an extremely small sample of all of the queries we run each week, and is likely to miss issues unless they are very common.

Second, what caused a query to be slow may not be reproducible after the fact. This can happen for a variety of reasons. The query could have been slow due to intermittent high load, or the plan Postgres uses to execute the query can change due to updated table statistics or a number of other reasons. Quite often, we would come across queries that had been slow, but we were unable to come up with an explanation for why.

After realizing how ineffective this “whack-a-mole” performance engineering approach was, we tried to design a better way. What we really wanted was some way we could collect enough information for every query as we initially ran it, such that we can always determine why a query was slow. As it turns out, we did manage to find a way to do that with Postgres.

Postgres provides an extension called auto_explain. When enabled, auto_explain automatically logs the query plan and execution information for every query as it’s run. It’s exactly like EXPLAIN ANALYZE, but automatically records the information for every query as it happens. Depending on how auto_explain is configured, you can obtain some or all of the following information:

How the query was executed.

How long it took to execute each part of the query.

How many rows were returned by each part of the query.

How many pages were read from disk or from cache from each part of the query.

How much time was spent performing I/O in each part of the query.

This is an absolute gold mine of information for analyzing why queries are slow. Importantly, all of this information is gathered as the query is run, so we do not run into issues where a slow query is not reproducible, since we no longer need to reproduce the slowness in order to determine why the query was slow. Even better, auto_explain has the option to obtain the output in JSON. This makes it easy to programmatically analyze the query plans.

To gather all of this information, we wrote a short job that tails our Postgres logs and watches for any Postgres plans. When the job sees a query plan, it sends the plan information to a separate Postgres instance. With all of this information gathered in one place we can easily ask all sorts of questions.

Since we now have the query plan for every single query that was ever executed in Heap, we no longer have this issue of trying to debug a slow query that is no longer slow. On top of that, we can now run analyses get a sense for what causes queries to be slow in aggregate.

Some of the questions we had and were able to answer with this data were:

What percentage of queries over ten seconds spent more than ten seconds performing an index scan that returned more than 10,000 rows? This is looking for queries that had to read a lot of data from disk due to an event that occurred often. *

What percentage of queries over ten seconds spent more than ten seconds performing an on-disk sort? An on-disk sort occurs when a Postgres process does not have enough memory allocated to sort the data in memory so it sorts the data on disk which is significantly slower.

What percentage of queries over ten seconds spent more than ten seconds reading from the

pageviewsindex or thesessionsindex? Session and pageview events are two commonly queried events as, well as two of the most frequent raw events. This makes them a common cause of slow queries.

We also used this information to come up with a fairly exhaustive list of root causes of slow queries. We did so by picking a random slow query, figuring out why it was slow, and then determine exactly how many queries were slow for the same reason. Then we filtered out that cause of slow queries and analyzed the remaining, unexplained slow queries. By doing this repeatedly, we were able to come up with explanations for why >90% of slow queries are slow.

What’s more, we can track how these rates change over time, and prioritize work accordingly. A lot of these causes will require multi-month engineering efforts to resolve, so it is critical that we know upfront how much the overall impact will be on our query performance.

For example, we knew via anecdote that our time-based user segment feature commonly causes on-disk sorts. With this kind of analysis, we were able to determine what percentage of slow queries came from this feature and were slow due to an on-disk sort. This turned out to be less common than we expected, and we deprioritized the work to invest in making this kind of query avoid on-disk sorts in favor of another query performance project.

We’ve found auto_explain to be an amazing tool for determining the causes of slow queries. It allows us to automatically collect detailed performance information about every single query as it executes. We can then use this information to determine how frequent different causes of slow queries are and prioritize what changes we should be working on.

After combing through all of the data, we decided to make use of table partitioning to improve query performance. By partitioning out session and pageview events, we can dramatically improve the performance of queries that require reading large amounts of sessions or pageviews. According to the data we’ve gathered from auto_explain, this directly addresses ~25% of all slow queries.

If all of this sounds interesting to you, Heap is hiring so please apply. If you have any questions feel free to reach out to me on Twitter at @mmalisper.

Interested in learning more about Heap Engineering? Meet our team to get a feel for what it’s like to work at Heap!

* This is 10,000 rows from a single shard. Since our data is heavily sharded, 10,000 rows is a lot more data than it sounds.